NYT opinion piece title: Effective Altruism Is Flawed. But What’s the Alternative? (archive.org)

lmao, what alternatives could possibly exist? have you thought about it, like, at all? no? oh...

(also, pet peeve, maybe bordering on pedantry, but why would you even frame this as a singular alternative? The alternative doesn't exist, but there are actually many alternatives that have fewer flaws.)

You don’t hear so much about effective altruism now that one of its most famous exponents, Sam Bankman-Fried, was found guilty of stealing $8 billion from customers of his cryptocurrency exchange.

Lucky souls haven't found sneerclub yet.

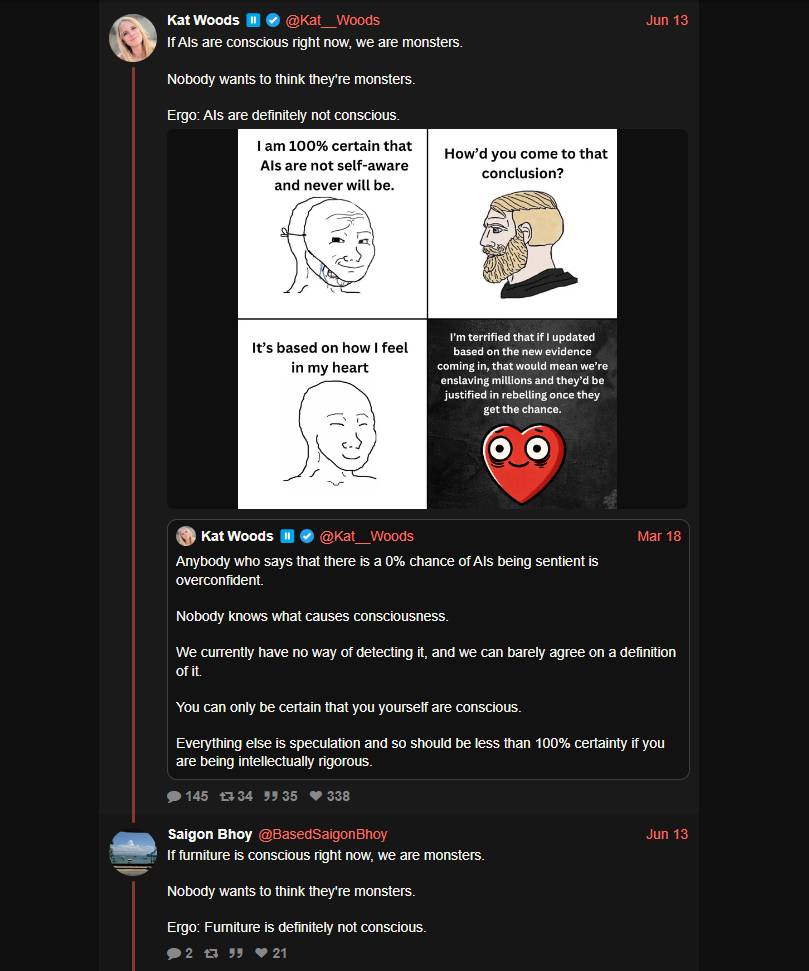

But if you read this newsletter, you might be the kind of person who can’t help but be intrigued by effective altruism. (I am!) Its stated goal is wonderfully rational in a way that appeals to the economist in each of us...

rational_economist.webp

There are actually some decent quotes critical of EA (though the author doesn't engage with them at all):

The problem is that “E.A. grew up in an environment that doesn’t have much feedback from reality,” Wenar told me.

Wenar referred me to Kate Barron-Alicante, another skeptic, who runs Capital J Collective, a consultancy on social-change financial strategies, and used to work for Oxfam, the anti-poverty charity, and also has a background in wealth management. She said effective altruism strikes her as “neo-colonial” in the sense that it puts the donors squarely in charge, with recipients required to report to them frequently on the metrics they demand. She said E.A. donors don’t reflect on how the way they made their fortunes in the first place might contribute to the problems they observe.