just read this https://www.wheresyoured.at/were-watching-facebook-die/ and realized that zucc burned on metaverse enough money to push 15 or so new pharmaceuticals from bench to market

behold, effective allocation of resources under capitalism

This gem from 25-year-old Avital Balwit, the Chief of Staff at Anthropic and researcher of "transformative AI at Oxford’s Future of Humanity Institute", discussing the end of labour as she knows it. She continues:

"The general reaction to language models among knowledge workers is one of denial. They grasp at the ever diminishing number of places where such models still struggle, rather than noticing the ever-growing range of tasks where they have reached or passed human level. [wherein I define human level from my human level reasoning benchmark that I have overfitted my model to by feeding it the test set] Many will point out that AI systems are not yet writing award-winning books, let alone patenting inventions. But most of us also don’t do these things. "

Ah yes, even though the synthetic text machine has failed to achieve a basic understanding of the world generation after generation, it has been able to produce ever larger volumes of synthetic text! The people who point out that it still fails basic arithmetic tasks are the ones who are in denial, the god machine is nigh!

Bonus sneer:

Ironically, the first job to go the way of the dodo was researcher at FHI, so I understand why she's trying to get ahead of the fallout of losing her job as chief Dario Amodei wrangler at OpenAI2: electric boogaloo.

Idk, I'm still workshopping this one.

🐍

Many will point out that AI systems are not yet writing award-winning books, […]

Holy shit, these chucklefucks are so full of themselves. To them, art and expression and invention are really just menial tasks which ought to be automated away, aren’t they? They claim to be so smart but constantly demonstrate they’re too stupid to understand that literature is more than big words on a page, and that all their LLMs need to do to replace artists is to make their autocomplete soup pretentious enough that they can say: This is deep, bro.

I can’t wait for the first AI-brained litbro trying to sell some LLM’s hallucinations as the Finnegans Wake of our age.

Many will point out that magic eight balls are not yet writing award-winning books, let alone patenting inventions. But most of us also don’t do these things.

I maintain that people not having to work is a worst-case scenario for silicon valley VCs who rely on us being too fucking distracted by all their shit products to have time to think about whether we need their shit products

https://news.ycombinator.com/item?id=40611600

It will never stop being incredibly funny that in the past 24 months an untold legion of people have emerged from heroic doses of shrooms/LSD clutching a note that just has “what if u could chat with a pdf???” scrawled on it

You laugh, but people in incel circles are heralding the nascent arrival of better than real AI girlfriends.

Now I am laughing harder.

The incels have also been crying about how the upcoming robot sexbots/ai will end women forever for a decade now so it isn't anything new. (They prob are shouting about it more, but they have been obsessed by this idea already in the past).

All I can say is bring it on. These guys seem to assume women will be furious about being replaced in this hypothetical scenario. I imagine most women would be relieved to have more free time and greater opportunities. Losing your role is only scary if you have benefited from your social position.

I saw someone on LinkedIn yesterday talking about how you'll be able to "chat with your database"

Like, what if I don't want to be friends with a database? What next? A few beers with my fridge? Skinny dipping with my toaster?

Both options would be horrible, of course, but they'd both be better than spending another five fucking minutes on LinkedIn.

You know, I have no direct information as to what kind of background those people might’ve had for their trips, but 1337% it was wrong (or this person is just full of it). Set and setting. Always. And dear god if I came out of a trip with that? What a fucking abysmal prospect

And yet holy fuck that’s quite possibly a strong driver in all this insanity isn’t it?

You could guess my internal state right now, and you’d need zero psychedelics to do it

it’s a fucking tragedy what techbros, fascists, and gurus have done to the perception of what a trip even is. there’s this hilariously toxic idea that a trip must have utility or else you’ve wasted your time and material; these people will gatekeep and control how you process what should be a highly personal experience, because that gives them a subtle but powerful amount of influence over what you’ll take back from your trip. the Silicon Valley/TESCREAL crowd have even ritualized bad set and setting so much that they don’t need a guru personally present in order to have a bad fucking time.

the damage these fucking fools have done is difficult to quantify, largely because psychedelic research is either banned or firmly in the hands of those very same fucking fools. it’s very important to them that you don’t use your temporarily jailbroken neocortex for your own purposes; that you never imagine a world where they don’t matter.

yep. a couple of years ago I read We Will Call It Pala and it hit pretty fucking on the nose

a couple years later, with all the dipshittery i've seen the clowns give airtime, and I'm kinda afraid? reticent? to read it again

Well would you fucking believe it: it turns out that deplatforming your horrible arseholes fixes the problems! (archive)

(well, until one of the problems scrapes together $44b and buys the platform)

every fuckin time

bsky comment:

the number of times i have seen even a small private forum or discord server go from "daily fights" to "everyone gets along" by just banning one or two loud assholes is uncountable

one of the follies of the early non-research internet was that the folks in charge of the more influential communities (Something Awful comes to mind) all tended to be weird fucking libertarian assholes insisting that debating fascists in a “free and fair environment” (whatever that is) must be a good thing, we can’t just ban them on sight for some reason. generally these weird libertarian assholes were motivated by typical weird libertarian asshole things — greed, or being fascists themselves.

and all of these horseshit policies around not banning fascists ended in complete disaster for those communities and those libertarian shitheads (SA again), but somehow they’re practically the only element of those early communities that carried over to the modern internet, likely because caring about community quality doesn’t make money, but pandering to nazi fuckwits does.

it probably goes without saying, but I take the apparently radical stance that nobody needs to interact with fascists and assholes and I won’t give their bullshit transit on any system I control. it’s always been surprising to me how many sysops consider “kick out the fucking fascists” an unworkable policy when it’s very easy to implement — it only gets hard when you allow the worst fucking people you know to gain a foothold.

Check out the big brain on Yud!

I think the pre-election prosecutions of Hillary and Trump were both bullshit. Is there anyone on Earth who holds that Hillary's email server and Trump's lawyer's payment's accounting were both Terribly Serious Crimes, and can document having held the former position earlier?

Ah yes the famous trial where HRC was prosecuted and convicted, I remember that, very analogous to Trump’s case, yes.

Big Yud has been huffing AI farts for so long he’s starting to hallucinate like one!

edit: the replies once again prove that a blue check is an instant -10 points to credibility.

My moderate distaste for people who capitalise words To Imply Some Other Meaning without Directly Stating It knows no Bounds.

my Absolute Fondness for Doing So remains... and I can't emphasize this enough - unchanged. that's it. that's the post

A few months ago I was looking into Lojban and trying to figure out how I would translate "charge" (as in, "my laptop is charging") and the best I could come up with is "pinxe lo dikca" ("drink electricity")

So... if you think LLMs don't drink, that's your imagination, not mine.

My parents said that the car was "thirsty" if the gas tank was nearly empty, therefore gas cars are sentient and electric vehicles are murder, checkmate atheists

That was in the replies to this, which Yud retweeted:

Hats off to Isaac Asimov for correctly predicting exactly this 75 years ago in I, Robot: Some people won't accept anything that doesn't eat, drink, and eventually die as being sentient.

Um, well, actually, mortality was a precondition of humanity, not of sentience, and that was in "The Bicentennial Man", not I, Robot. It's also presented textually as correct....

In the I, Robot story collection, Stephen Byerley eats, drinks and dies, and none of this is proof that he was human and not a robot.

Why are techbros such shit at Lojban? It's a recurring and silly pattern. Two minutes with a dictionary tells me that there is {seldikca} for being charged like a capacitor and {nenzengau} for charging like a rechargeable battery.

Incredible Richard Stallman vibe in this picture (this is a compliment)

Person replying to someone saying they are not a cult leader by comparing them to another person often seen as a cult leader.

🙃

Not a cult just a following. Like Andrew Tate for nerds

Edit: somebody also Did The Math (xcancel) "I eyeballed the rough numbers from your graph then re-plotted it as a linear rather than a logarithmic scale, because they always make me suspicious. You're predicting the effective compute is going to increase about twenty quadrillion times in a decade. That seems VERY unlikely."

i really, really don't get how so many people are making the leaps from "neural nets are effective at text prediction" to "the machine learns like a human does" to "we're going to be intellectually outclassed by Microsoft Clippy in ten years".

like it's multiple modes of failing to even understand the question happening at once. i'm no philosopher; i have no coherent definition of "intelligence", but it's also pretty obvious that all LLMs are doing is statistical extrapolation on language. i'm just baffled at how many so-called enthusiasts and skeptics alike just... completely fail at the first step of asking "so what exactly is the program doing?"

The y-axis is absolute eye bleach. Also implying that an "AI researcher" has the effective compute of 10^6 smart high schoolers. What the fuck are these chodes smoking?

this article/dynamic comes to mind for me in this, along with a toot I saw the other day but don't currently have the link for. the toot detailed a story of some teacher somewhere speaking about ai hype, making a pencil or something personable with googly eyes and making it "speak", then breaking it in half the moment people were even slightly "engaged" with the idea of a person'd pencil - the point of it was that people are remarkably good at seeing personhood/consciousness/etc in things where it just outright isn't there

(combined with a bit of en vogue hype wave fuckery, where genpop follows and uses this stuff, but they're not quite the drivers of the itsintelligent.gif crowd)

Straight line on a lin-log chart, getting crypto flashbacks.

I think technically the singularitarians were way ahead of them on the lin-log charts lines. Have a nice source (from 2005).

Similar vibes in this crazy document

EDIT: it's the same dude who was retweeted

https://situational-awareness.ai/

AGI by 2027 is strikingly plausible. GPT-2 to GPT-4 took us from ~preschooler to ~smart high-schooler abilities in 4 years. Tracing trendlines in compute (~0.5 orders of magnitude or OOMs/year), algorithmic efficiencies (~0.5 OOMs/year), and “unhobbling” gains (from chatbot to agent), we should expect another preschooler-to-high-schooler-sized qualitative jump by 2027.

Last I checked ChatGPT can't even do math, which I believe is a prerequisite for being considered a smart high-schooler. But what do I know, I don't have AI brain.

I just want to share this HN comment because it was the worst thing I've read all day.

Wait until they have kids. The deafness gene will be passed along. Soon enough we'll be like the cars with the hardware without the software or the locked features.

A treatment for a type of genetic deafness sounds good and all; but think of the implications man. The no-longer-deaf people might hear some steamy music and then get frisky and in the mood to make deaf babies. And next thing you know bam! There'll be activation keys for hearing.

Who is more evolutionarily fit: a deaf person who appreciates technological progress and has kids, or an unloved eugenics enjoyer posting on hacker news?

I expect SneerClub to provide my alibis when reading one of these finally makes me snap.

Apparently deaf people never have kids. Hmm. Alright. Okay.

This guy isn't even the first techbro to suggest recently that if disabled people are allowed to have kids, eventually we will all be disabled. I know very very little about genetics, but I'm still pretty sure it doesn't work that way.

for the sneerclub fans, this vid about MS Satoshi was pretty funny. All the Adam Something videos are entertaining for a couch sneer https://www.youtube.com/watch?v=dv4H4trnssc

Always a good sign when your big plan is virtually identical to L. Ron Hubbard's big plan in the 70s.

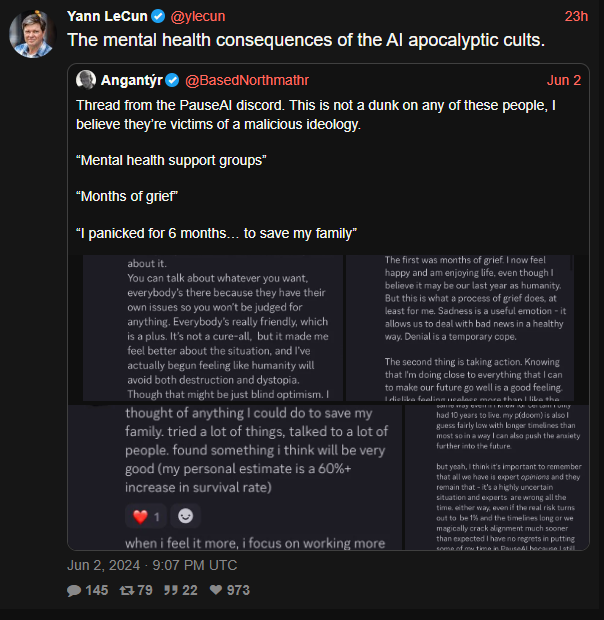

Not a sneer, just a feelsbadman.jpg b.c. I know peeps who have been sucked into this "it's all Joever.png" mentality (myself included, for various we-live-in-hell reasons; honestly I never recovered after my cousin explained to me what nukes were while playing in the sandbox at 3)

The sneerworthy content comes later:

1st) Rats never fail to impress with appeal to authority fallacy, but 2nd) the authority in question is max totally unbiased not a member of the extinction cult and definitely not pushing crank theories for decades fuckin' tegmark roflmaou

"You know, we just had a little baby, and I keep asking myself... how old is he even gonna get?"

Tegmark, you absolute fucking wanker. If you actually believe your eschatological x-risk nonsense and still produced a child despite being convinced that he's going to be paperclipped in a few years, you're a sadistic egomaniacal piece of shit. And if you don't believe it and just lie for the PR, knowingly leading people into depression and anxiety, you're also a sadistic egomaniacal piece of shit.

Truly I say unto you, it is easier for a camel to pass through the eye of a needle than it is to convince a 57 year old man who thinks he's still pulling off that leather jacket to wear a condom. (Tegmark 19:24, KJ Version)

So apparently Mozilla has decided to jump on the bandwagon and add a roided Clippy to Firefox.

I’m conflicted about this. On the one hand, the way they present it, accessibility does seem to be one of the very few non-shitty uses of LLMs I can think of, plus it’s not cloud-based. On the other hand, it’s still throwing resources onto a problem that can and should be solved elsewhere.

At least they acknowledge the resource issue and claim that their small model is more environmentally friendly and carbon-efficient, but I can’t verify this and remain skeptical by default until someone can independently confirm it.

The accessibility community is pretty divided on AI hype in general and this feature is no exception. Making it easier to add alt is good. But even if the image recognition tech were good enough—and it’s not, yet—good alt is context dependent and must be human created.

Even if it’s just OCR, folks are ambivalent. Many assistive techs have native OCR they’ll do automatically, and it’s better, usually. But not all, and many AT users don’t know how to access the text recognition when they have it.

Personally I’d rather improve the ML functionality and UX on the assistive tech side, while improving the “create accessible content” user experiences on the authoring tool side. (Ie. Improve the braille display & screen reader ability to describe the image by putting the ML tech there, but also make it much easier for humans to craft good alt, or video captions, etc.)

I deleted a tweet yesterday about twitter finally allowing alt descriptions on images in 2022 - 25 years after they were added to the w3c spec (7 years before twitter existed). But I added the point that OCR recommendations for screenshots of text have kinda always been possible, as long as they reliably detect that it's a screenshot of text. But thinking about the politics of that overwhelmed me, hence the delete.

Like, I'm kinda sure they already OCR all the images uploaded for meta info, but the context problem would always be there from an accessibility POV.

My perspective is that offering no assistance to people unaware of accessibility issues with images, beyond "would you like to add an alt description", leaves the politics of it all between the people using twitter. I don't really like seeing people being berated for not adding alt text to their image as if twitter is not the third party that cultivated a community for 17 years without ALT descriptions, then suddenly threw them out there and let us deal with it amongst ourselves.

Anyway... I will stick to what I know in future

From the Philosophy Tube comment section:

Video is way too long. But I did go to chatGPT to ask extensively about this Judith Butler character.

Trying to figure out if that comment is a bit or not, and at least one of the poster's other comments on the video is this literary masterwork:

some people have observable x chromosones and others have observable y chromosones, and those categories are good to make useful distinctions, just like I make a distinction between a chair and a sofa... I still don't believe gender is real, and I can still observe sex. Sure, sex could be an illusion, but it doesn't matter. The chair is most certainly an illusion.... At what point does wood become a chair? Is a three legged chair a chair? A two legged? A one legged? A none legged? Is a seat and a chair the same thing? What if I break the seat in half? Is it still a chair?

my friend started working for a company that does rlhf for openai et al, and every single day I wish I could post to you guys about the bizarre shit she sees this company do. they're completely off the rails