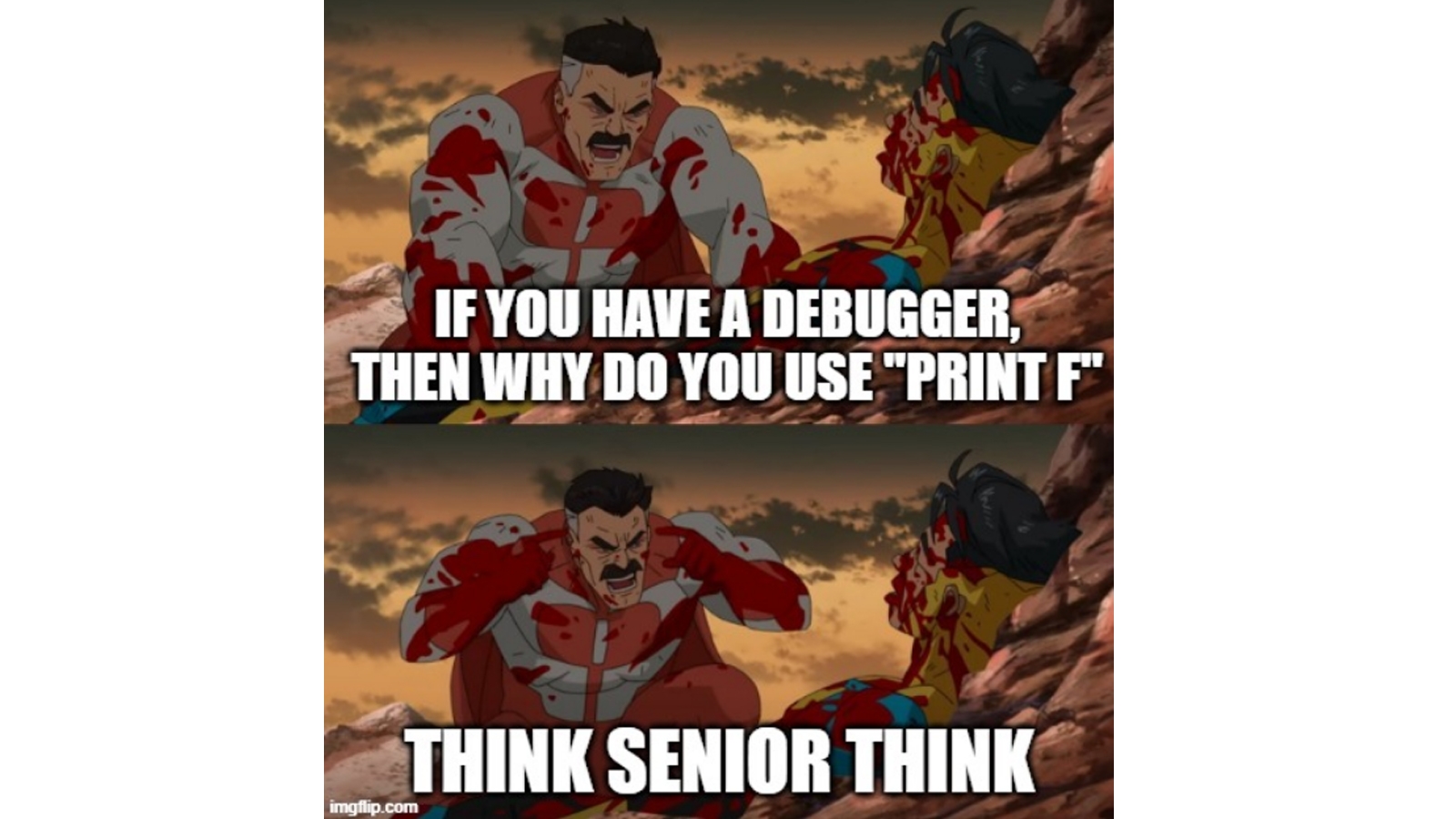

Because I have nested loops and only want to see certain cases and I'm not smart enough to set up conditional breakpoints or value watching

Programmer Humor


Value watching is just printf; change my mind

Someone should make that bell curve meme with people using print statements at both the newbie and the expert end. Debuggers are great, but I'd say it's a 50/50 split for me between debugger and logger when I'm debugging something.

Programming is also about learning your tools :)

Looks like Godot doesn't have it yet.

Because, sometimes, having your program vomit all over your console is the best way to remain focused on the problem.

This is the reason for me. Sometimes I don't want to step through the code and just want to scan through a giant list of output.

Similarly, every once in a while I'll throw in warning messages (which I can't ship with) to push myself to go back and finish that TODO instead of letting it linger.
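For Python code, one way to get a similar nag is the standard `warnings` module. This is only a sketch: `export_report` and its TODO are hypothetical, and whether a warning actually blocks shipping depends on your setup (for example, running the tests with `python -W error` turns warnings into exceptions).

```python
import warnings


def export_report(rows):
    # Hypothetical unfinished function. The warning fires when called and
    # points at the caller (stacklevel=2), so the TODO is hard to ignore.
    warnings.warn("TODO: export_report ignores the timezone column", stacklevel=2)
    return list(rows)


if __name__ == "__main__":
    export_report([("2024-05-01", 12.5)])
```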

Exactly. And there are plenty of places where setting up a live debug stream is a massive PITA, while finding the log files is only a huge PITA.

Because sometimes you need to investigate an issue that happens only on the production machines, and you can't (or shouldn't) set up debugging on those.

Debugger good for microscopic surgery, log stream good for real time macro view. Both perspectives needed.

As someone who has done a lot of debugging and has also written many log analysis tools, I'd say it's not an either/or; they complement each other.

I've seen logs dismissed a lot in these threads recently, and while I love the debugger (I'd boast that I know very few people who can play with gdb like I can), logs are an art, and just as essential.

The beginner printf thing is an inefficient learning stage that people get past early in their careers once they learn the debugger, but eventually they'll need to relearn the art of proper logging too, and understand how to use both tools (logging and debugging).

There's a stage when you love prints.

Then you discover debuggers and realize they are much more powerful. (For those of you who haven't used gdb enough: you can script it to iterate STL (or any other) containers, and test your fixes without writing any code yet.)

And then, once your (and everyone else's) code has been in production for a while and some random client reports a bug that only happened for a few hard-to-trace events, guess what?

Logs are your best friend. You use them to get the scope of the problem and the region of the problem (much easier if you have indexing tools like Splunk, though grep/awk/sort/uniq also work). You also get the input parameters and output results, and you often notice the root cause without needing to spin up a debugger. Saves a lot of time for everyone.

If you can't, you replicate it. That often takes a while, but at least your logs give you a better chance of using the right parameters. Then, when all else fails, you spin up the debugger (the heavy guns).

The debugger takes more time, and in production systems you often have plenty of issues where things are working as designed, plus a lot of upstream/downstream issues that logs will help you with much faster.
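The grep/awk/sort/uniq pass mentioned above often looks something like `grep ERROR app.log | awk '{print $3}' | sort | uniq -c | sort -rn`. Here is a rough Python equivalent, assuming a hypothetical whitespace-separated format `<timestamp> <LEVEL> <component> <message...>`. Unlike `grep`, it only matches ERROR in the level field; adjust the field positions to whatever your logs actually contain.

```python
from collections import Counter


def count_errors_by_component(lines):
    """Rough equivalent of: grep ERROR | awk '{print $3}' | sort | uniq -c | sort -rn

    Assumes a hypothetical log format: "<timestamp> <LEVEL> <component> <message...>".
    """
    counts = Counter()
    for line in lines:
        fields = line.split()
        # Skip malformed lines and anything that isn't logged at ERROR level.
        if len(fields) >= 3 and fields[1] == "ERROR":
            counts[fields[2]] += 1
    return counts


if __name__ == "__main__":
    sample = [
        "2024-05-01T10:00:00 INFO  checkout started order=17",
        "2024-05-01T10:00:01 ERROR payment timeout order=17",
        "2024-05-01T10:00:02 ERROR payment timeout order=18",
        "2024-05-01T10:00:03 ERROR inventory missing sku=A1",
    ]
    # most_common() plays the role of the final `sort -rn`.
    for component, n in count_errors_by_component(sample).most_common():
        print(f"{n:5d} {component}")
```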

I've spent inordinate amounts of my career going through logs from software in prod. I'm amazed anyone would dismiss their usefulness!

Ahem, I'm a senior developer and I love prints. I appreciate debuggers and use them when in a constrained situation but if the source code is easily mutable (and I work primarily in an interpreted language) then I can get denser, higher quality information with print in a format that I can more comfortably consume than a debugger (either something hard-core like gdb or some fancy IDE debugger plugin).

That said, I agree about logging the shit out of everything. It's why I'm quaking in my boots about Cisco acquiring Splunk.

Try debugging a distributed, embedded, real-time system that crashes when you sit at a breakpoint too long because the heartbeat stops responding.

The Nordic Semi Bluetooth stack was like that when I worked with it a few years ago. If you hit a breakpoint while the Bluetooth stack was running, it would crash the program.

So printf to dump data while it ran and only break when absolutely necessary.

Or just any DPDK program, where any gdb-induced slowdown makes the code "behave as expected".

Because it takes 3 hours to reach the error and I’ve got other shit to fix.

I found debuggers practically unusable with asynchronous code. If you've got a timeout in there, it fires as soon as you stop at a breakpoint.

Theoretically, this could be solved by 'pausing' the clock that does the timeouts, but that's not trivial.

At least, I haven't seen it solved anywhere yet.

I mean, I'm still hoping I'm wrong about the above, but at this point I find it kind of ridiculous that debuggers are so popular to begin with.

Because it implies that synchronous code, or asynchronous code without timeouts, is still quite popular.
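The "pause the clock that does the timeouts" idea can at least be sketched for code you control: route every timeout check through an injectable clock and freeze that clock while paused. `PausableClock` and `timed_out` are hypothetical helpers, not a feature of asyncio or any debugger, and wiring `pause()`/`resume()` to the debugger's stop/continue events is exactly the non-trivial part.

```python
import time


class PausableClock:
    """Monotonic clock that stops advancing while paused (hypothetical helper).

    Timeout code must read time from this object instead of calling
    time.monotonic() directly; otherwise pausing has no effect on it.
    """

    def __init__(self, base=time.monotonic):
        self._base = base          # underlying real clock, injectable for tests
        self._paused_total = 0.0   # total seconds spent paused so far
        self._paused_at = None     # base time when the current pause began

    def now(self):
        if self._paused_at is not None:
            # Frozen at the moment the pause started.
            return self._paused_at - self._paused_total
        return self._base() - self._paused_total

    def pause(self):
        if self._paused_at is None:
            self._paused_at = self._base()

    def resume(self):
        if self._paused_at is not None:
            self._paused_total += self._base() - self._paused_at
            self._paused_at = None


def timed_out(clock, started, timeout):
    # A timeout check that only counts "unpaused" time.
    return clock.now() - started > timeout
```

Injecting `base` keeps the clock testable with a fake time source; in real use it would default to `time.monotonic`.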

Debugger? I hardly know er

I still use print as a quick "debug"; it's just convenient to have multiple prints in the code when you're iterating and changing code.

I have to print f to show respect.

Quick printf vs. setting up conditions.

Embedded Oldster : "Of course, we had it rough. I had to use a single blinking red LED."

Unix Oldster : "An LED? We used to DREAM about having an LED, I still have hearing loss from sitting in front of daisy wheel printers."

Punch-card Oldster : "Luxury."

Debuggers are for when you hit a problem with your code. Logs are for when someone else hits a problem with your code.

not all problems are worth busting out the heavy machinery (until they are)

Because, sadly, I more often than not do NOT have access to a debugger.

Learning how to write good debugging print messages is a skill, and it transfers nicely to writing good debug log messages, which makes diagnosing user issues much easier.
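In Python, the step from debug prints to debug log messages can be as small as swapping `print` for the standard `logging` module. A minimal sketch; the `orders` logger and `apply_discount` are hypothetical. The point is that a good message carries its inputs and output, and the configured level decides whether it shows up.

```python
import logging

logger = logging.getLogger("orders")  # hypothetical logger name


def apply_discount(order_id, total, percent):
    discounted = total * (100 - percent) / 100
    # Include inputs and result, so the line is useful on its own when someone
    # greps for it months later. Arguments are only formatted if DEBUG is enabled.
    logger.debug(
        "apply_discount order_id=%s total=%.2f percent=%s -> %.2f",
        order_id, total, percent, discounted,
    )
    return discounted


if __name__ == "__main__":
    # DEBUG while developing; in production, raise the level to INFO or WARNING
    # and the same calls stay in the code but are filtered out.
    logging.basicConfig(
        level=logging.DEBUG,
        format="%(asctime)s %(levelname)s %(name)s %(message)s",
    )
    apply_discount(42, 80.0, 25)
```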

If you're debugging a React re-render, for example, where the error happens on the 7th re-render, then having the debugger pause 7 times can be annoying.

I don't know about React, but in similar situations in Java I use conditional breakpoints. Sometimes I go as far as introducing a temporary variable to do the count for me and basing my condition on that.
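A Python version of the counter trick, as a minimal sketch: `RenderProbe` and its `tick()` method are hypothetical names, not part of React, Java IDEs, or any library. It counts calls and only invokes the hook (the built-in `breakpoint()` by default) on the Nth call, so the first N-1 calls run without pausing.

```python
class RenderProbe:
    """Counts calls and fires a hook on exactly the Nth one (hypothetical helper)."""

    def __init__(self, stop_at, hook=breakpoint):
        self.stop_at = stop_at
        self.hook = hook
        self.count = 0

    def tick(self, **state):
        # `state` is just there so it is visible as a local at the (Pdb) prompt.
        self.count += 1
        if self.count == self.stop_at:
            self.hook()


probe = RenderProbe(stop_at=7)


def render(props):
    # Hypothetical render function: only the 7th call drops into the debugger.
    probe.tick(props=props)
    return f"<div>{props}</div>"
```

pdb can also do this without touching the code: `break app.py:42, count == 7` sets a breakpoint that only stops when the expression is true, and `ignore 1 6` skips the first six hits of breakpoint 1.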

I honestly need to remember the debugger exists. They weren't common for interpreted languages when I got started.

Grey beards merge and increment (i.e., senior devs unite and rise up)

The issue is that a debugger will trigger every time. In video games, at least, you have code that runs over and over again every tick. Putting debugger breakpoints in that would be useless most of the time. Print is where it's at for tick/timer debugging.
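For per-tick code, a middle ground is to print only when a value changes, so a loop that runs every frame produces a few lines instead of thousands. A sketch in Python; `ChangeLogger`, `run_demo`, and the `player_state` values are hypothetical, not engine APIs.

```python
class ChangeLogger:
    """Prints a per-tick value only when it changes (hypothetical helper)."""

    def __init__(self, label, emit=print):
        self.label = label
        self.emit = emit
        self._last = None
        self._seen = False  # the first observed value always gets printed

    def observe(self, frame, value):
        if not self._seen or value != self._last:
            self.emit(f"[frame {frame}] {self.label} = {value!r}")
            self._last = value
            self._seen = True


def run_demo(emit=print):
    log = ChangeLogger("player_state", emit=emit)
    for frame in range(600):  # e.g. 10 seconds at 60 ticks per second
        # Hypothetical state: airborne for frames 240-299, grounded otherwise.
        state = "airborne" if 240 <= frame < 300 else "grounded"
        log.observe(frame, state)


if __name__ == "__main__":
    run_demo()
```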

I used to work on a debugger. It was called TotalView, and it was a really stellar multiprocess, multithread debugger. You could debug programs with thousands of cores and threads. You could type real C++ code to inspect values, inject code into a running process, force the CPU to run at a given line, like a magic goto. But we had a saying "printf wins again". Sometimes you just can't get the debugger to tell you what you need.

At the risk of coming off as a noob here: how do you actually use the debugger?

I mostly work on Python stuff in VS Code. Sometimes I just run the code and look at the error. Sometimes I try the debug and run option, but it acts the same as just running the code; there's some stuff like the variables window, but I don't know how to use that information to debug.

Debuggers allow you to step through code and inspect the program state at any moment during execution. You can even sometimes run commands inline to see how stuff reacts.

This means you can usually just hover a variable or something in your IDE while execution is paused and see its current value without having to restart execution every time you want to print something different.

> You can even sometimes run commands inline to see how stuff reacts.

It's honestly not a bad way to write some of the more complex lines of code, too. In Python with VS Code (or VSCodium), you can set a breakpoint where you're writing your code and then write the next line in the Watch section. That way you essentially see a live version of the result you're getting. I especially like this for stuff like list comprehensions.

I don't know how to use VS Code. In general, in Python you can call `breakpoint()` and your code will pause there and become interactive. If you're running in Docker, you'll want to attach to the container. When it hits the breakpoint, you basically drop into a normal Python REPL with extra features.

https://docs.python.org/3/library/pdb.html
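Applied to the situation in the original post (nested loops where only some cases matter), `breakpoint()` can sit behind an ordinary `if`, which acts as a conditional breakpoint written in code. A sketch with a hypothetical `find_negative_cells` function:

```python
def find_negative_cells(grid, debug=False):
    """Scan a 2-D grid; pause in pdb only on the cells we care about."""
    found = []
    for i, row in enumerate(grid):
        for j, value in enumerate(row):
            if value < 0:
                found.append((i, j))
                if debug:
                    # Only this case stops. At the (Pdb) prompt you can inspect
                    # i, j, value, and row, then continue with `c`.
                    breakpoint()
    return found


if __name__ == "__main__":
    grid = [[3, -1, 4], [1, 5, -9]]
    print(find_negative_cells(grid))  # pass debug=True to stop at each hit
```

Setting the environment variable `PYTHONBREAKPOINT=0` turns every `breakpoint()` call into a no-op, so a forgotten one won't hang a run.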

This is really, really, good for debugging and developing. You can interactively examine stuff and try stuff right there. You don't have to rerun the whole thing to be like "ok what's the second item in the list? Is this syntax right to sort it?" or whatever you're trying to do

I use pdb++ for syntax highlighting and some other QoL features, too.

You're gonna want logging in production...

Because if you invested a lot of time in carefully choosing where the printfs should go to get all the info you need, you just need to unset NDEBUG and voilà, everything you need is there again.

Because for all the love I have for NgRx, debugging it is kinda hard.

The debugger can't handle the chonkers app I work on.

Because it's significantly slower to analyze a series of computations and their values when stepping through them

Later on, when I want to look productive, I'll delete all those printfs, then pat myself on the back for committing a lot of code changes that sprint and for reducing our log storage costs by 75%.

Don't delete printf statements... wrap them in an "if (DEBUG)" function. You still reduce log storage costs while simultaneously expanding the code base and giving yourself a way to debug the system later.
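A Python take on the `if (DEBUG)` wrapper, as a sketch: the `DEBUG` environment variable and `debug_print` are hypothetical conventions, not a standard API. For the NDEBUG-style switch mentioned earlier, Python's closest built-in is `__debug__`, which is `False` under `python -O` (that flag also strips `assert` statements).

```python
import os
import sys


def debug_print(*args, **kwargs):
    """print() that only writes when the DEBUG environment variable is "1".

    The variable is checked on every call, so it can be toggled at runtime.
    Output goes to stderr unless the caller passes file=... explicitly.
    """
    if os.environ.get("DEBUG") == "1":
        kwargs.setdefault("file", sys.stderr)
        print(*args, **kwargs)


if __name__ == "__main__":
    debug_print("loaded config", {"retries": 3})  # silent unless DEBUG=1
```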

I rarely use the debugger. When I do, it's because I'm tired, and I always regret it tbh. It's just a waste of time and clutters my screen.

The fastest way by far is to simply read and think. You have to do it anyway.

Print is the last resort; I use it maybe once per day. It doesn't clutter my screen, works with the shortcuts I'm used to, and is totally flexible. I can comment it out and share it with the team or reuse it later. The issue is often immediately clear.

For example, today I used it on a position after multiplying it by a matrix. I didn't explicitly convert it from a float3 to a float4, so w was 0, which made the multiplication rotate the position vector instead of translating it. Once I printed it, it became clear.

Had I just read the code again and thought about what it was actually doing I would've found it more efficiently.
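The float3/float4 bug above is the classic homogeneous-coordinates gotcha: with column vectors, a 4x4 transform stores its translation in the last column, and that column gets multiplied by w. With w = 0 only the rotation/scale part survives. A plain-Python sketch, assuming column vectors and a row-major nested-list matrix (shader languages and engines differ in convention):

```python
def mat_vec(m, v):
    """Multiply a 4x4 row-major matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


# Translation by (5, 0, 0): the offset lives in the last column.
translate = [
    [1, 0, 0, 5],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]

position = [1, 2, 3]
as_point = position + [1]      # w = 1: the translation column is applied
as_direction = position + [0]  # w = 0: the translation column is multiplied by 0

if __name__ == "__main__":
    print(mat_vec(translate, as_point))
    print(mat_vec(translate, as_direction))
```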