GPT-4's Secret Has Been Revealed

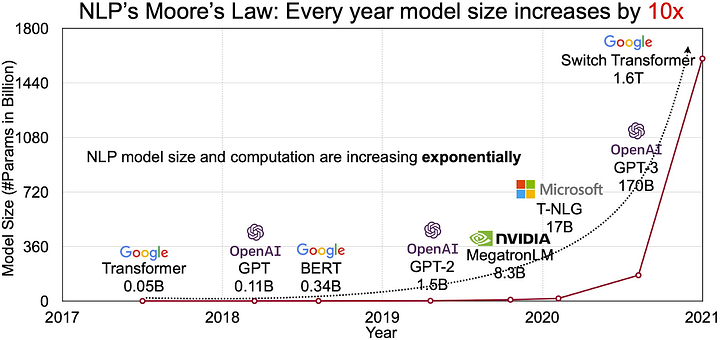

According to ithinkbot.com, GPT-4 reportedly uses a Mixture of Experts (MoE) architecture with about 1.76 trillion parameters in total. It is rumored to consist of eight models of 220 billion parameters each (8 × 220 billion = 1.76 trillion), linked together as experts. The MoE idea is nearly 30 years old and has been used for large language models before, for example in Google's Switch Transformer.
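To make the MoE idea concrete, here is a minimal sketch in plain Python. It is not GPT-4's implementation, which has not been published. The toy experts, the gate scores, and the choice of routing to the top 2 experts are all assumptions made for illustration. A gate scores each expert, only the best-scoring ones run, and their outputs are mixed by gate weight.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_scores, experts, top_k=2):
    """Run only the top_k experts and mix their outputs by renormalized gate weight."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = sum(probs[i] / norm * experts[i](x) for i in chosen)
    return output, chosen

# Eight toy "experts" (hypothetical): expert k just multiplies its input by k + 1.
experts = [lambda x, k=k: x * (k + 1) for k in range(8)]

# Hypothetical gate scores; in a real model a small learned network produces these.
gate_scores = [0.1, 2.0, 0.3, 0.0, 1.5, -1.0, 0.2, 0.4]

output, chosen = moe_forward(3.0, gate_scores, experts)
print("experts used:", chosen, "output:", round(output, 3))

# The rumored parameter count is just the sum over the experts.
total_params = 8 * 220_000_000_000
print("total parameters:", total_params)
```

The point of the design is that only a few experts run per input, so the compute per token is much lower than the total parameter count suggests.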

The information about GPT-4 comes from George Hotz, founder of Comma.ai, an autonomous driving startup. Hotz is an AI expert who is also known for his hacking past: he was the first to crack the iPhone and Sony's PlayStation 3. Other AI experts have also commented on Hotz's Twitter feed, saying that his information is very likely true.

Hotz also speculated that GPT-4 does not produce just a single output, but iterates 16 times, improving the output with each iteration. The open-source community could now try to replicate GPT-4's architecture and train its own models.

Citations:

[1] https://matt-rickard.com/mixture-of-experts-is-gpt-4-just-eight-smaller-models

[2] https://www.reddit.com/r/ChatGPT/comments/14erkut/gpt4_is_actually_8_smaller_220b_parameter_models/

[3] https://www.reddit.com/r/singularity/comments/14eojxv/gpt4_8_x_220b_experts_trained_with_different/

[4] https://thealgorithmicbridge.substack.com/p/gpt-4s-secret-has-been-revealed

[5] https://www.linkedin.com/posts/stephendunn1_gpt-4s-secret-has-been-revealed-activity-7078664788276334593-X6CX

[6] https://the-decoder.com/gpt-4-is-1-76-trillion-parameters-in-size-and-relies-on-30-year-old-technology/

Having an uncensored model that outperforms GPT-4 would be a dream come true. The ability to run it locally would also be an added bonus. But I doubt it would ever be possible on a desktop PC with a 3080. One can dream.

I think, like phones before it, AI is going to get larger and larger before compactness becomes something they work towards. Someday though, maybe it'll be like sci-fi where every building/family has its own AI system.

Check out llama.cpp sometime, it's FOSS and you can run it without too crazy system requirements. There are different sets of parameters you can use for it, I think the 13 billion parameter set is only like 25 gigs. Definitely nowhere near as good as GPT, but still fun and hopefully will improve in the future.

7b q4 (quantized, basically compressed down to only using 4-bit precision) is about 4 GB of RAM, 13b q4 is about 8 GB, and 30b q4 is the one that's about 25 GB.

30b generates slowly, but more or less usably, on a CPU. The rest generate on a CPU just fine.
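A rough way to sanity-check these sizes is parameters × bits per weight ÷ 8. The sketch below is a back-of-the-envelope estimate of the weights alone, not a measurement of llama.cpp. The function name and the round parameter counts (7, 13, and 30 billion) are assumptions for illustration.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9

# Nominal parameter counts; the real models differ slightly from these round numbers.
models = [("7b", 7e9), ("13b", 13e9), ("30b", 30e9)]

for name, n_params in models:
    fp16 = weight_memory_gb(n_params, 16)  # unquantized 16-bit weights
    q4 = weight_memory_gb(n_params, 4)     # idealized 4-bit weights
    print(f"{name}: 16-bit ~{fp16:.1f} GB, 4-bit ~{q4:.1f} GB")
```

Real RAM use comes out higher than the idealized 4-bit figure, since quantized formats store some extra scaling data alongside the weights and the runtime needs working memory on top. That is consistent with the somewhat larger numbers quoted above. The 16-bit estimate for 13b lands near 26 GB, which may be where the "25 gigs" figure came from.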

Okay awesome, that's even better than I thought. I had a friend showing it to me last night, and I was thinking about trying it out today. I run a 12th gen i9 and a 2080 Ti, so I assume I would probably get better performance on my GPU, right?

It should, yeah. It used to be that if you wanted to run the model on your GPU, it needed to fit entirely within its VRAM, which really limited what models people could use on consumer GPUs. I think recently they've added the ability to run part of the model on your GPU+VRAM and part of it on your CPU+RAM, although I don't know the specifics as I've only briefly played around with it.
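For a feel of how such a split might work out, here is a hedged sketch that estimates how many layers of a model would fit in VRAM, with the rest staying on the CPU. It assumes all layers are the same size, which is a simplification. The helper name, the 1 GB reserve, the layer counts (40 and 60), and the 11 GB VRAM figure are illustrative assumptions, not values taken from llama.cpp. As far as I know, llama.cpp takes the number of layers to offload as an option (`--n-gpu-layers`), so a number like this is what you would experiment with.

```python
def layers_that_fit(model_gb, n_layers, vram_gb, reserve_gb=1.0):
    """Estimate how many equally sized layers fit in VRAM after holding back a reserve."""
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    # Layers that fit = usable VRAM divided by the per-layer size (model_gb / n_layers).
    fit = int(usable_gb * n_layers / model_gb)
    return min(n_layers, fit)

# Hypothetical examples using the sizes quoted in this thread.
print(layers_that_fit(model_gb=8.0, n_layers=40, vram_gb=11.0))   # 13b q4: everything fits
print(layers_that_fit(model_gb=25.0, n_layers=60, vram_gb=11.0))  # 30b q4: only part fits
```

Whatever does not fit on the GPU runs on the CPU from system RAM, so generation gets slower as fewer layers are offloaded.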

That's awesome. My system has 32 GB of RAM and a 3080, so hopefully that can work well.