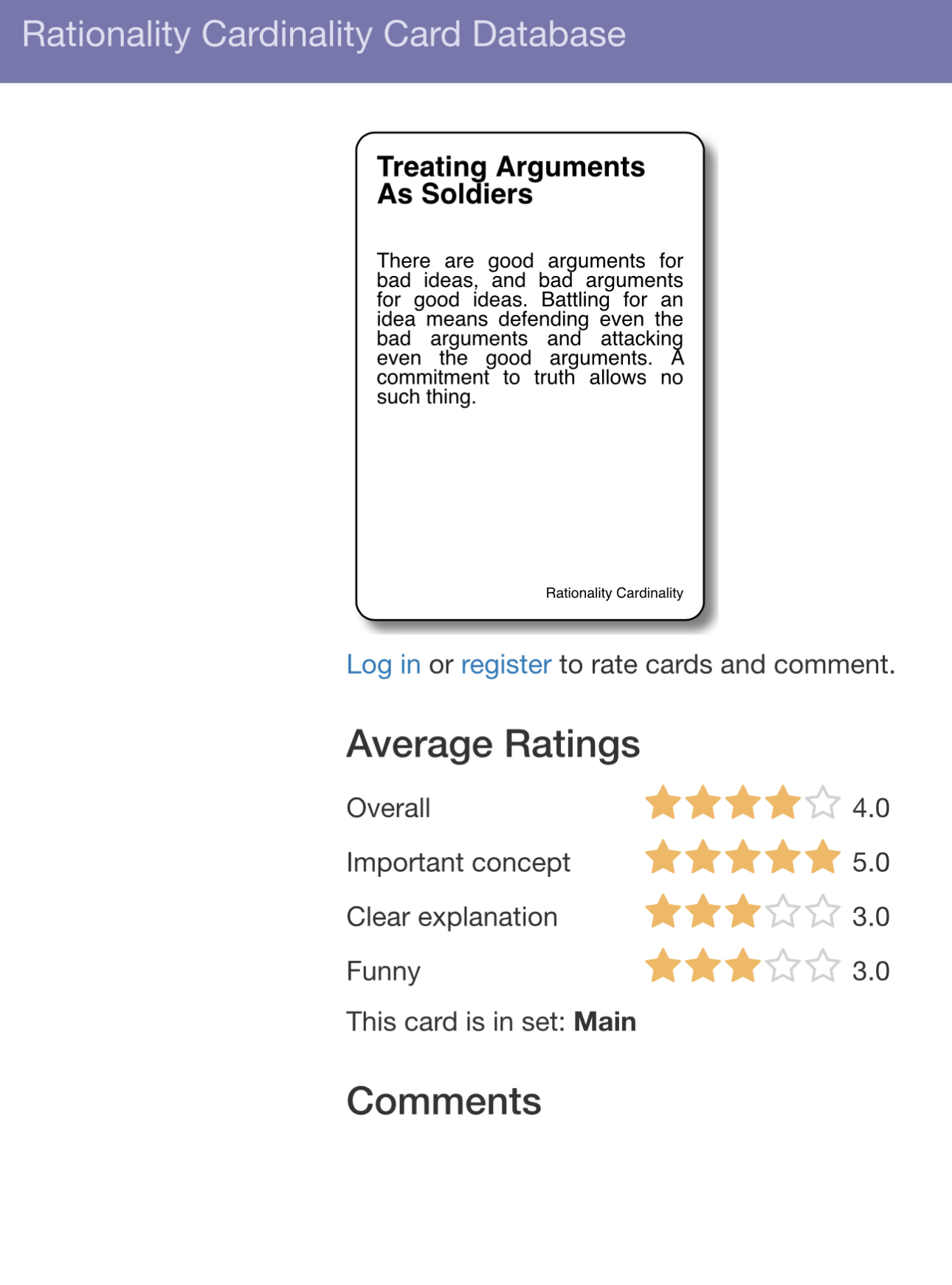

dear fuck I found their card database, which doesn’t seem to be linked from their main page (and which managed to crash its tab as soon as I clicked on the link to see all the cards spread out, because lazy loading isn’t real):

e: somehow the cards get less funny the higher the funny rating goes

e2: there’s no punchability rating but it’s desperately needed

one of the data fluffers solemnly logs me as “did not finish” as I flee the orgy