It’s like you founded a combination of an employment office and a cult temple, where the job seekers aren’t expected or required to join the cult, but the rites are still performed in the waiting room in public view.

chef's kiss

The surface claim seems to be the opposite: he says that because of Moore's law AI rates will soon be at least 10x cheaper, and because of Mercury in retrograde this will cause usage to increase muchly. I read that as meaning we should expect to see chatbots pushed into even more places they shouldn't be, even though their capabilities have already stagnated as per observation one.

- The cost to use a given level of AI falls about 10x every 12 months, and lower prices lead to much more use. You can see this in the token cost from GPT-4 in early 2023 to GPT-4o in mid-2024, where the price per token dropped about 150x in that time period. Moore’s law changed the world at 2x every 18 months; this is unbelievably stronger.
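For a rough sense of how the quoted rates compare, here is a minimal sketch that converts each one to a per-year factor. The 15-month gap between GPT-4 and GPT-4o is my own assumption (the quote only says "early 2023" to "mid-2024"), and `annualized` is a hypothetical helper name.

```python
def annualized(factor: float, months: float) -> float:
    """Convert 'factor-x over `months` months' into an equivalent per-12-month factor."""
    return factor ** (12.0 / months)

# Rates as stated in the quote above.
moore = annualized(2, 18)      # 2x every 18 months
claimed = annualized(10, 12)   # 10x every 12 months
# ASSUMPTION: 15 months between the two launches; the quote gives no exact dates.
gpt4_to_4o = annualized(150, 15)

print(f"Moore's law:     {moore:.2f}x per year")
print(f"Claimed trend:   {claimed:.2f}x per year")
print(f"GPT-4 -> GPT-4o: {gpt4_to_4o:.1f}x per year (assuming 15 months)")
```

Moore's law at 2x every 18 months works out to roughly 1.59x per year, so the comparison in the quote is at least arithmetically consistent; whether the single GPT-4 to GPT-4o data point supports a trend is a separate question.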

Saltman has a new blogpost out he calls 'Three Observations' that I feel too tired to sneer at properly, but I'm sure it will be featured in pivot-to-ai pretty soon.

Of note is that he seems to admit chatbot abilities have plateaued for the current technological paradigm, by way of offering the "observation" that model intelligence is logarithmically dependent on the resources used to train and run it (i = log(r)), so it's officially diminishing returns from now on.
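To spell out why a logarithmic relationship means diminishing returns, here is a small sketch that takes the i = log(r) shorthand at face value. The natural log and the unitless resource numbers are my assumptions; the blogpost does not specify a base or units.

```python
import math

def intelligence(resources: float) -> float:
    """Toy model: i = log(r). ASSUMPTION: natural log, arbitrary units."""
    return math.log(resources)

# Each 10x jump in resources buys the same fixed increment of "intelligence".
steps = [1, 10, 100, 1_000, 10_000]
pairs = list(zip(steps, steps[1:]))
gains = [intelligence(b) - intelligence(a) for a, b in pairs]
for (a, b), gain in zip(pairs, gains):
    print(f"{a:>6} -> {b:>6} resources: +{gain:.3f}")

def resources_needed(target_i: float) -> float:
    """Invert the toy model: linear gains in i need exponential growth in r."""
    return math.exp(target_i)
```

Under this toy model every additional fixed step of "intelligence" costs a constant multiple of everything spent so far.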

Second observation is that when a thing gets cheaper it's used more, i.e. they'll be pushing even harder to shove it into everything.

Third observation is that

The socioeconomic value of linearly increasing intelligence is super-exponential in nature. A consequence of this is that we see no reason for exponentially increasing investment to stop in the near future.

which is hilarious.

The rest of the blogpost appears to mostly be fanfiction about the efficiency of their agents that I didn't read too closely.

Penny Arcade weighs in on deepseek distilling chatgpt (or whatever the deal actually is):

You misunderstand, they escalate to the max to keep themselves (including selves in parallel dimensions or far future simulations) from being blackmailed by future super intelligent beings, not to survive shootouts with border patrol agents.

I am fairly certain Yud has said something very close to that effect in reference to preventing blackmail from the basilisk, even though he tries to no-true-Scotsman the zizians wrt his functional decision 'theory' these days.

Distilling is supposed to be a shortcut to creating a quality training dataset by using the output of an established model as labels, i.e. desired answers.
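As a rough illustration of that idea, here is a toy sketch of building a distillation dataset. `teacher_model` is a hypothetical stand-in (a real one would be an API call or a local model), and nothing here reflects how deepseek or anyone else actually does it.

```python
from typing import Callable

def teacher_model(prompt: str) -> str:
    """HYPOTHETICAL stand-in for an established model's output."""
    return f"teacher answer to: {prompt}"

def build_distillation_set(
    prompts: list[str], teacher: Callable[[str], str]
) -> list[tuple[str, str]]:
    """Pair each prompt with the teacher's output, which then serves as the label."""
    return [(p, teacher(p)) for p in prompts]

dataset = build_distillation_set(["What is 2+2?", "Name a prime."], teacher_model)
# A student model would then be trained to reproduce these labels,
# inheriting whatever biases the teacher's answers carry.
for prompt, label in dataset:
    print(prompt, "->", label)
```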

The end result of the new model ending up with biases inherited from the reference model should hold, but using as a base model the same model you are distilling from would seem to be completely pointless.

The 671B model, although 'open sourced', is a 400+GB download and is definitely not runnable on household hardware.

Taylor said the group believes in timeless decision theory, a Rationalist belief suggesting that human decisions and their effects are mathematically quantifiable.

Seems like they gave up early if they don't bring up how it was developed specifically for deals with the (acausal, robotic) devil, and also awfully nice of them to keep Yud's name out of it.

edit: Also in lieu of explanation they link to the wikipedia page on rationalism as a philosophical movement which of course has fuck all to do with the bay area bayes cargo cult, despite it having a small mention there, with most of the Talk: page being about how it really shouldn't.

NYT and WaPo are his specific examples. He also wants a connection to "a policy/defense/intelligence/foreign affairs journal/magazine" if possible.

Today on highlighting random rat posts from ACX:

(Current first post on today's SSC open thread)

In slightly more relevant news, the main post is scoot asking if anyone can put him in contact with someone from a major news publication so he can pitch an op-ed by a notable ex-OpenAI researcher. It will be ghost-written by him (meaning siskind) and is about how they (the ex-researcher) opened a forecast market that predicts ASI by the end of Trump's term, so be on the lookout for that when it materializes I guess.

wrong thread :(

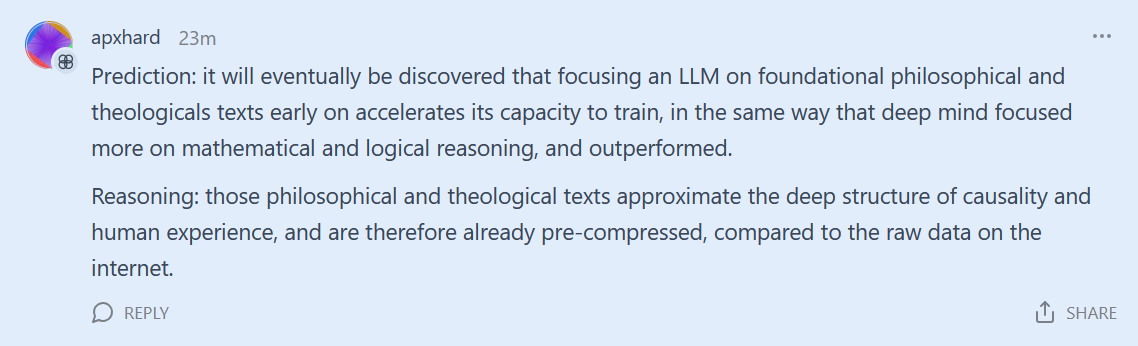

Could also be "don't worry about deepseek"-type messaging that addresses concerns without naming names, telling us that a drastic reduction in infrastructure costs was foretold by the writing of St Moore and was thus always inevitable on the way to immanentizing the AGI, ἀλληλούϊα.