See THIS POST

Notice the 2,000 upvotes?

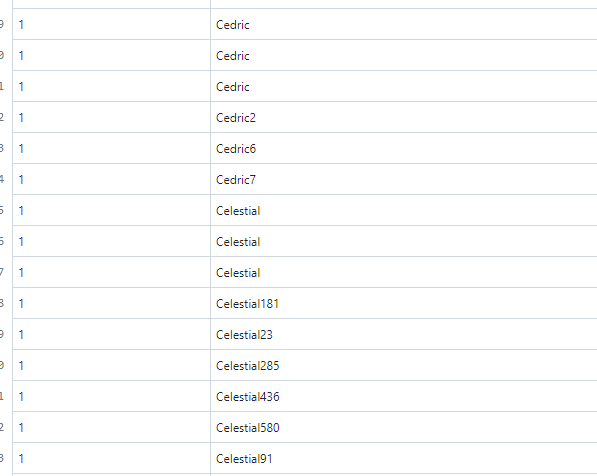

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It's mostly bot traffic.

Important Note

The OP of that post did admit to purposely using bots for that demonstration.

I am not making this post specifically because of that post. Rather, we need to collectively organize and find a method for dealing with this kind of manipulation.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good over the long run.

Having admins proactively monitor their content and communities helps, as does enabling new user approvals, captchas, email verification, etc. But this does not solve the problem.

The REAL problem

The real problem: the fediverse is so open that there is NOTHING stopping dedicated bot owners and spammers from...

- Creating new instances for hosting bots, and then federating with other servers. (Spinning up a new instance can be fully automated, in UNDER 15 seconds.)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. NEWS POST 1 POST TWO

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance online (lemmyonline.com). I can assure you, I don't have 30k users and 1.2 million comments.

- There are no built-in "real-time" methods for admins to identify suspicious activity from their users via the UI. I am only able to fetch this data directly from the database, and I don't think it is even exposed through the REST API.
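To make that last point a bit more concrete, here is a rough sketch of the kind of check I would like admins to have. It assumes you have already pulled vote records out of the database yourself. The `Vote` record, the `suspicious_instances` function, and the thresholds are all hypothetical names and numbers I made up for illustration; none of them come from Lemmy's schema or API. The idea is simply to flag instances where nearly every vote on a post comes from an account created shortly before it voted.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Vote:
    """Hypothetical vote record; not Lemmy's actual schema."""
    voter: str                 # "user@instance", e.g. "alice@example.social"
    account_created: datetime  # when the voting account was registered
    cast_at: datetime          # when the vote was cast


def instance_of(voter: str) -> str:
    """Return the instance part of a "user@instance" handle."""
    return voter.rsplit("@", 1)[-1]


def suspicious_instances(
    votes,
    max_account_age=timedelta(days=1),  # assumed cutoff for a "fresh" account
    min_votes=5,                        # ignore instances with too few votes
    threshold=0.8,                      # assumed share of fresh-account votes
):
    """Map each flagged instance to its share of votes from fresh accounts."""
    totals = defaultdict(int)
    fresh = defaultdict(int)
    for vote in votes:
        inst = instance_of(vote.voter)
        totals[inst] += 1
        if vote.cast_at - vote.account_created <= max_account_age:
            fresh[inst] += 1

    flagged = {}
    for inst, total in totals.items():
        share = fresh[inst] / total
        if total >= min_votes and share >= threshold:
            flagged[inst] = share
    return flagged
```

This is a heuristic, not proof of botting. A brand new legitimate instance would trip it too, so treat the output as a list of things for a human to look at, not an automatic ban list.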

What can happen if we don't identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If a single user with limited technical resources can manipulate that content, as was proven above...

What is going to happen when big-corpo wants to swing their fist around?

Edits

- Removed most of the images containing instances. Some of those issues have already been taken care of, and I don't want to distract from the ACTUAL problem.

- Cleaned up post.