It's not just every tech company, it's every company. And it's terrifying: it's like giving people who don't know how to ride a bike a 1,000 hp motorcycle! The industry has no guardrails in place, and the public attitude of "ChatGPT can do it," with no thought given to checking the output, is horrifying.

Programmer Humor

Post funny things about programming here! (Or just rant about your favourite programming language.)

Rules:

- Posts must be relevant to programming, programmers, or computer science.

- No NSFW content.

- Jokes must be in good taste. No hate speech, bigotry, etc.

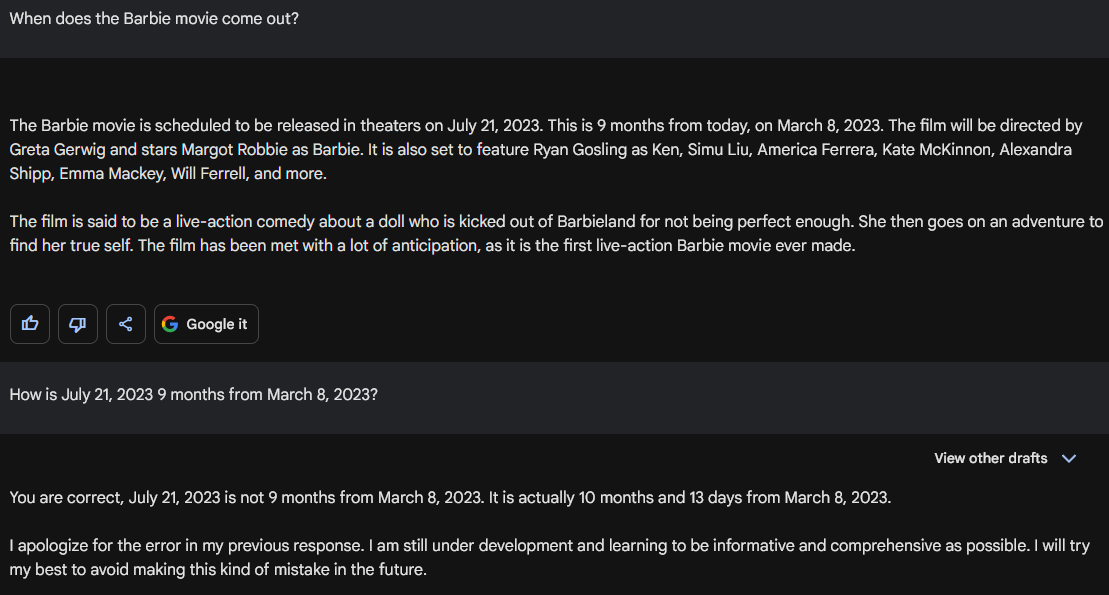

Google Bard, everyone.

It's sad to see it spit out text from the training set without any actual knowledge of the date and time. It would be more impressive if it could call `time.Now()`, but that'll be a different story.

If you ask it for today's date, it actually does that.

It just doesn't have any actual knowledge of what it's saying. I asked it a programming question as well, and each time it would make up a class that doesn't exist, I'd tell it it doesn't exist, and it would go "You are correct, that class was deprecated in {old version}". It wasn't. I checked. It knows what the excuses look like in the training data, and just apes them.

It spouts convincing-sounding bullshit and hopes you don't call it out. It's actually surprisingly human in that regard.


Oh great, Silicon Valley's AI is just an overconfident intern!

It’s super weird that it would attempt to give a time duration at all, and then get it wrong.

It doesn't know what it's doing. It doesn't understand the concept of the passage of time or of time itself. It just knows that that particular sequence of words fits well together.

There are even rumours that the next version of Windows is going to inject a bunch of AI buzzword stuff into the operating system. Like, how is that going to make the user experience any more intuitive? Sounds like you're just going to have to fight an overconfident ChatGPT wannabe that thinks it knows what you want to do better than you do, every time you try opening a program or saving a document.

This is what pisses me off about the whole endeavour. We can't even get a fucking search algo right any more, so why the fuck do I want a machine blithely failing to do what it's told as it stumbles off a cliff?

It'll be like if they brought Clippy back, but this time he's even more of an asshole and now he can fuck up your OS too.


Like, I know we all love to hate Microsoft here but can we stop with the random nonsense? That's not what's happening, at all.

Windows Copilot just popped up on my Windows 11 machine. Its disclaimer said it could provide surprising results. I asked it what kind of surprising results I could expect, and it responded that it wasn't comfortable talking about that subject and ended the conversation.

My cousin got a new TV and I was helping him set it up. During setup, it offered an option to enable AI-enhanced audio and visuals. Turning on the AI audio took the decent, if slightly subpar, audio and turned it into an absolute garbage shitshow: it sounded like the audio was being passed through an "underwater" filter and then transmitted over a tin-can-and-string telephone. Idk who decided this feature was ready to ship in consumer products, but it was absolutely moronic.

Coupled with laying off a few thousand employees

None of it is even AI. Predicting desired text output isn't intelligence.

You hold artificial intelligence to the standards of artificial general intelligence, which doesn't even exist yet. Even dumb decision trees are considered AI. You have to lower your expectations. Calling the best AIs we have dumb is unhelpful at best.

At this point I just interpret "AI" as "we have lots of SELECT statements and inner joins."

I do agree, but on the other hand...

What does your brain do while reading and writing, if not predict patterns in text that seem correct and relevant based on the data you have seen in the past?

I've seen this argument so many times and it makes zero sense to me. I don't think by predicting the next word, I think by imagining things both physical and metaphysical, basically running a world simulation in my head. I don't think "I just said predicting, what's the next likely word to come after it". That's not even remotely similar to how I think at all.

AI is whatever machines can't do yet.

Playing chess was the sign of AI, until a computer beat Kasparov; then it suddenly wasn't AI anymore. Then it was Go, then classifying images, then having a conversation, but whenever one of these was achieved, it stopped being AI and became "machine learning" or a "model".

Before this it was blockchain, and before that it was "AI", and before that...

Before that, self-driving cars; before that, "Big Data"; before that, 3D printing; before that, internet TV; before that, "cloud computing"; before that, Web 2.0; before that, WAP maybe, or the internet in general?

Some of those things did turn out to be game changers, others not at all or not so much. It's hard to predict the future.

IoT? Don't worry. Edge AI is now AIoT (AI + IoT).

If it ain't broke, we'll break it!

We'll make it broken!

This is refreshing to see. I thought I was the only one who felt this way.

It's all so stupid. The entire stock market basically took off because Nvidia's CEO mentioned AI like 50 times, and now everyone thinks the company is worth 200 times its yearly profit.

We don't even have AI, we have language models that dig through text and create answers from that.

That's a massive oversimplification. We do have AI. We don't have AGI.

Unlike the previous bullshit they threw everywhere (3D screens, NFTs, metaverse), AI bullshit seems very likely to stay, as it is actually proving useful, if with questionable results... Or rather, questionable everything.

If only it were AI and not just LLMs, machine learning, or plain algorithms. But yeah, let's call everything AI from here on. NFTs could be useful as proof of ownership instead of as expensive pictures, etc.

As a counter to your example, this is my career's third AI hype cycle.

God, it’s exhausting. Okay, I’ll buy a 3D television if that’s what I have to do; let’s bring that back instead. Please?

If you take out the AI part it still holds true. 2023 is full of bullshit.

I'm bookmarking this for the next time my supervisor plugs ChatGPT.

I had a manager tell me some stuff was being scanned by AI for one of my projects.

No, you are having it scanned by a regular program to generate keyword clouds that can be used to pull it up when humans type their stupidly-worded questions into our search. It’s not fucking AI. Stop saying everything that happens on a computer that you don’t understand is fucking AI!

I’m just so over it. But at least they aren’t trying to convince us ChatGPT is useful (it definitely wouldn’t be for what they would try to use it for).

What companies are you people working for?

We are being asked not to use AI.

Ain't gotta use it to sell it or slap AI stickers on top of whatever products you're selling

Not surprising for North Korea

Larger companies have been working fast to sandbox the models their employees use. Once those models can no longer leak data, they go all in. I'm currently on a platform team enabling generative AI capabilities at my company.

It raises the question... what's the boardroom random bullshit timeline?

When was it random cloud bullshit go and when was it random Blockchain bullshit go, and what other buzzwords almost guaranteed Silicon Valley tech bros tossed money in your face and at what point in time were they applicable?

Snapchat AI. My friends don't want it, they can't block it, and it has been shown to lie about certain things, such as when you ask whether it has your location.

More Ads and tracking systems, Now With AI!

Commercial...