What a fair and unbiased title this article has. It also wipes away any form of credibility immediately by citing Elon Musk first and foremost. The dude has money; the brains come from the people he treats like shit in his businesses.

"AI experts..."

Lists people who are not AI experts

Correct me if I'm wrong, but I thought the big risk with AI is its use as a disinfo tool. Skynet ain't nowhere near close, but a complete post-truth world is possible. It's already bad now... Could you imagine AI-generated recordings of crimes being used as evidence against people? There are already scam callers that use recordings to make people think they've kidnapped relatives.

I really feel like most people aren't afraid of the right things when it comes to AI.

That's largely what these specialists are talking about: people emphasising the existential apocalypse scenarios when there are more pressing matters. I think the purpose a tool is built for should be more of a concern than its training data in many cases. People keep freaking out about LLMs and art models while still ignoring the plague of models built specifically to manipulate and predict the subconscious habits and activities of individuals. Models built specifically to recreate the concept of a unique individual and their likeness for financial reasons should also be regulated in new, unique ways. People shouldn't be able to be bought wholesale, but they should be able to sell their likeness as a subscription, with rights to withdraw from future production, etc.

I think the ways we think about a lot of things have to change based around the type of society we want. I vote getting away from a system that lets a few own everything until people no longer have the right to live.

Indeed, because the AI just makes shit up.

That was the problem with the lawyer who brought bullshit ChatGPT cases into court.

Hell, last week I did a search for last year's Super Bowl and learned that Patrick Mahomes apparently won it by kicking a game-winning field goal.

Disinfo is a huge, huge problem with these half-baked AI tools.

Isn't this already possible though? Granted, AI can do this exponentially faster: write the article, generate deepfakes, and then publish or whatever. But... again, can't regular people already do this if they want? I mean, with the obvious aside, it's not AI that is generating deepfakes of politicians and celebrities, it's people using the tool.

It's been said already, but AI as a tool can be abused just like anything else. It's not AI that is unethical (necessarily), it is humans that use it unethically.

I dunno. I guess I just think about the early internet and the amount of shareware and forwards-from-grandma (if you read this letter you have 5 seconds to share it, early 2000's type stuff) and how it's evolved into text to speech DIY crafts. AI is just the next step that we were already headed down. We were doing all this without AI already, it's just so much more accessible now (which IMO, is the only way for AI to truly be used for good. Either it's 100% accessible for all or it's hoarded away.)

This also means that there are going to be people who use it for shitty reasons. These are the same types of people who are the reason we have signs and laws in the first place.

It seems to come down to do we let something that can do harm be used despite it? I think there's levels, but I think the potential for good is just as high as the potential for disaster. It seems wrong to stop the use of AI possibly finding cures for cancer and genetic sequencing for other ailments just because some creeps can use it for deepfakes. Otherwise, the deepfakes would still have existed without AI and we would be without any of the benefits that AI could give us.

Note: for as interested and hopeful as I am for AI as a tool, I also try to be very aware of how harmful it could be. But most ways I look at it, somehow people like you and me using AI in various ways for personal projects, good or bad, just seems inconsequential compared to the sheer speed with which AI can create. Be it code, assets, images, text, puzzles and patterns, we have one of our first major technological advancements and half of us are arguing over who gets to use it and why they shouldn't.

Last little tidbit: think about AI art/concepts you've seen in the last year. Gollum as playing cards, Teenage Mutant Ninja Turtles as celebs, deepfakes, what have you. Think about the last time you saw AI art. Do you feel as awed/impressed/annoyed by the AI art of yesterday as you did by the AI art of last year? Probably not; you probably saw it, thought "AI", and moved on.

I've got a social hypothesis that this is what deepfake generations are going to be like. It doesn't matter what images get created because a thousand other creeps had the same idea and posted the same thing. At a certain point the desensitization sets in and it becomes redundant. So just because this can happen slightly more easily, we are going to sideline all of the rest of the good the tool can do?

Don't get me wrong, I don't disagree by any means. It's an interesting place to be stuck, I'm just personally hoping the solution is pro-consumer. I really think a version of current AI could be a massive gain to people's daily lives, even if it's just for hobbies and mild productivity. But it only works if everyone gets it.

There’s a huuuge gap between evil robot overlords and ChatGPT-like stuff tho. LLMs are not taking over the world anytime soon.

I don't know why my previous comments didn't show up in my profile. But it's a very simple fact: a regular adult human being has roughly 600 trillion synapses (connections between neurons). You would need a 64-bit int to index these connections, and it would cost you ~4.8 PB just to index them. A toddler has way more connections, before we trim the unnecessary ones as we get older. It's freaking impossible for our modern distributed computing to do this efficiently (imagine DDoSing your own network by sending that astronomical amount of data around the sections of your virtual brain).

We do have very limited-scale simulation projects (for medical research, etc.), and yeah, they're not getting smarter or anything; imagine how stupid a regular human can be.

edit: I know you can subsection and index those connections with a cluster hierarchy. It would cut some cost, but it also introduces overhead. If the overhead (search time, etc.) is more than the cost of using a 64-bit index, then using a 64-bit index is "cheaper" (trading data size for lookup efficiency).
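For anyone who wants to check the ~4.8 PB figure, here is a quick back-of-the-envelope sketch. It assumes the 600 trillion synapse count from the comment above (an estimate, not a measured value), one flat 64-bit id per connection, and decimal petabytes; the function names are made up for illustration.

```python
# Back-of-the-envelope check of the index size quoted above.
# Assumptions: 600 trillion connections (the commenter's estimate),
# one flat 64-bit integer id per connection, decimal petabytes (10**15 bytes).
SYNAPSES = 600 * 10**12
BYTES_PER_INDEX = 8  # 64 bits


def index_size_bytes(n_connections: int, bytes_per_index: int = BYTES_PER_INDEX) -> int:
    """Total storage needed to hold one id per connection."""
    return n_connections * bytes_per_index


def min_index_bits(n_connections: int) -> int:
    """Smallest number of bits that gives every connection a distinct id."""
    return max(1, (n_connections - 1).bit_length())


total = index_size_bytes(SYNAPSES)
print(total / 10**15, "PB")              # size of the flat index
print(min_index_bits(SYNAPSES), "bits")  # minimum id width
```

The minimum id width comes out above 32 bits, which is why a 32-bit index is not enough and 64-bit is the next standard integer size. A tightly packed custom width would shrink the total somewhat, at the cost of the kind of lookup overhead mentioned in the edit.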

Something you may not have considered is that the majority of our brains are used for things like sensory input and motor control, not for thinking. This is why brain size relative to body size is so important. A whale has a far larger brain than you or I, but is significantly less intelligent.

@fearout @throws_lemy There's a huge gap between South Korea and North Korea too. But just this week, some mentally distressed individual worked his way up to that gap and then scrambled across it, in violation of his own best interests. Whenever it becomes possible to cross a gap, it'll get crossed.

This analogy is not even remotely applicable.

I hate this Skynet discourse. Like, no fucking shit LLMs aren’t Skynet, they’re not even AI. Acting like that’s a legitimate criticism that needs to be discussed is blatantly just an attempt to distract from the real issues and criticism.

There are real issues surrounding how these models are trained, how the data for the models is selected and who gets compensated for that data, let alone the discussions around companies using these tools to devalue skilled individuals and cut their pay.

But they don’t have to engage with any of that because they get to debate the merits of whether or not the shitty sitcom script autocomplete program will launch nukes or make paper clips out of people.

People are wildly overestimating the power and applicability of LLMs. I get that it’s scary to have a conversation with a computer, but we are still just as far from artificial general intelligence as we have always been.

People also have that tendency to personify AIs. I don't really understand why.

I think people just kinda like to personify things, it happens all the time even with things that aren’t even close to human

Reminds me of the bit in Community where a pencil gets named Steve and then snapped. People can get attached to anything really, e.g. a pet rock.

Personification is a very normal and natural human thing. Very likely an evolutionary adaptation. We see ourselves in nearly everything as humans.

Evil robot overlords aren't the problem. Evil human overlords replacing human laborers with robots, and then exterminating the human laborers, is the problem.

It's not AI but the system making use of it which has been, is and will continue to be the problem.

Give a group of capitalists a single stick and they might hit one another to own it. Give them a single nuke and..?

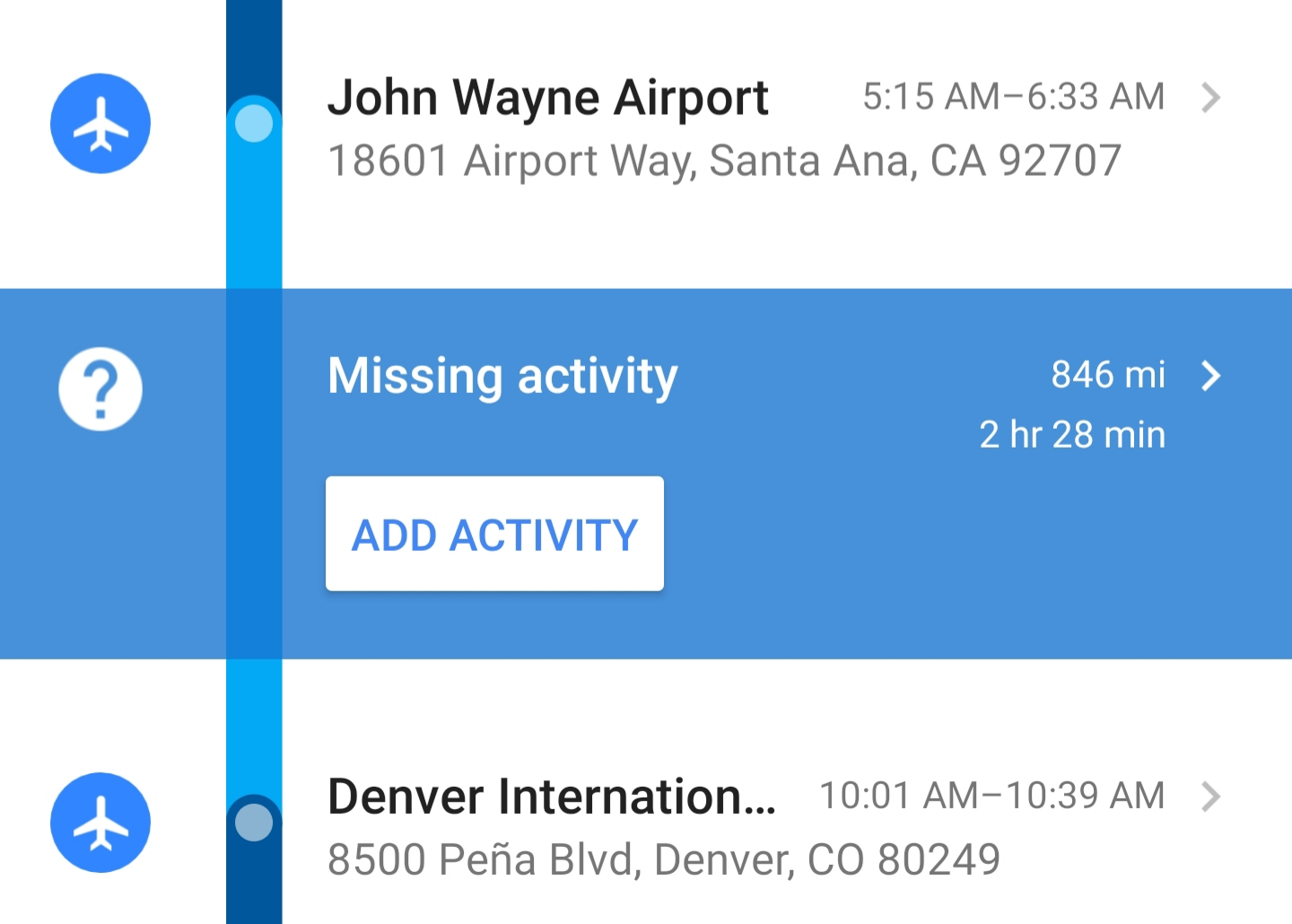

Every time I hear people worried about the robot uprising, I remember the time Google Location couldn't figure out what method of transportation I was using for 2 and a half hours between the Santa Ana, CA airport and the Denver, CO airport.

Silly Google! Obviously you skateboarded, doing plenty of gnarly tricks on the way.

It honestly makes my blood boil when I read "AI will destroy everything" or "kill everyone" or similar. No, it's not going to happen. We are nowhere close to that, and we will never create, intentionally or unintentionally, unkillable beings like the Terminator, simply because there is no material to build one with that would put it on par.

Big tech wanting to freeze AI just wants to control it themselves. Full speed ahead!

@SSUPII @throws_lemy @technology concur.

Skynet is a red herring.

The real issue is that #AI is putting more stress on long-standing problems we haven’t solved well. Good opportunity to think carefully about how we want to distribute the costs and benefits of knowledge work in our society.

👉🏼“AI stole my book/art” is not that different from “show me in the search results, but only enough that people click through to my page”

👉🏼 “AI is taking all the jobs” is not that different from “you outsourced all the jobs overseas”

👉🏼 “the AI lied to me!” is not that different from “that twitter handle lied about me!”

The main difference is scale, speed and cost. As things continue to speed up, social norms and regulations fall behind faster. #ai

AI scraping and stealing people's art is literally nothing like a search engine.

Maybe that would hold up if the original artist was paid and credited/linked to, but right now there is literally zero upside to having your artwork stolen by big tech.

@donuts would you please share your thinking?

I certainly agree that you can see the current wave of Generative AI development as “scraping and stealing people’s art.” But it’s not clear to me why crawling the web and publishing the work as a model is more problematic than publishing crawl results through a search engine.

Search engines are rightly considered fair use because they provide a mutual benefit to both the people who are looking for "content" and the people who create that same "content". They help people find stuff, which basically is good for everyone.

On the other hand, artists derive zero benefit from having their art scraped by big tech companies. They aren't paid licensing fees (they should be), they aren't credited (they should be), and their original content is not visible or being advertised in any way. To me, it's simply exploitation right now, and I hope that things can change in the future so that it can benefit everyone, artists included.

For example, image search has been contentious for very similar reasons.

- You post a picture online for people to see, and host some ads to make some money when people look at it.

- Then Google starts showing the picture in image search results.

- People view the image on Google and never visit your site or click on your ads. Worst case, Google hotlinks it and you incur increased hosting costs with zero extra ad revenue.

I certainly think that a Generative AI model is a more significant harm to the artist, because it impacts future, novel work in addition to already-published work.

However in both cases the key issue is a lack of clear & enforceable licensing on the published image. We retreat to asking “is this fair use?” and watching for new Library of Congress guidance. We should do better.

I don't think it's naive to think that there is an existential risk from AI. Yes, LLMs are not sentient and I don't think ChatGPT is Skynet, but it seems pretty obvious to me that if we could create an entity that is generally intelligent, has goals, and is orders of magnitude smarter than humans, then it cannot be controlled. And that poses a risk if its interests come into conflict with ours.

Global mega-corps are already a kind of AI. They're generally intelligent actors that find creative solutions to problems and work to advance their own interests, often at the expense of humanity, despite attempts to rein them in. A true AGI might be like a super-powered corporation that can have its big decision meetings every second instead of once a week.

However, I am also concerned with global regulation of this stuff because if state actors are the only ones allowed to have AI, that could get dystopian very quickly as well so... 🤷♂️

It makes my blood boil when people dismiss the risks of ASI without any notable counterargument. Do you honestly think something a billion times smarter than a human would struggle to kill us all if it decided it wanted to? Why would it need a terminator to do it? A virus would be far easier. And who's to say how quickly AI will advance now that AI is directly assisting progress? How can you possibly have any certainty on any timelines or risks at all?

Put down the crack, there is a huge ass leap between general intelligence and the LLM of the week.

Next you're going to tell me cleverbot is going to launch nukes. We are still incredibly far from general intelligence ai.

I never said how long I expected it to take, how do you know we even disagree there? But like, is 50 years a long time to you? Personally anything less than 100 would be insanely quick. The key point is I don't have a high certainty on my estimates. Sure, might be perfectly reasonable it takes more than 50, but what chance is there it's even quicker? 0.01%? To me that's a scary high number! Would you be happy having someone roll a dice with a 1 in 10000 chance of killing everyone? How low is enough?

> But like, is 50 years a long time to you?

I'll be dead.

> but what chance is there it's even quicker? 0.01%? To me that's a scary high number! Would you be happy having someone roll a dice with a 1 in 10000 chance of killing everyone? How low is enough?

The odds are higher that Russia nukes your living area.

Well, I won't be, and just because one thing might be higher probability than another doesn't mean it's the only thing worth worrying about.

An orca coming out of your asshole is just as likely as a meteor coming from space and blowing up your home.

Both could happen, but are you going to shape your life around whether or not they occur?

Your concern about AI should absolutely be directed at the governments and people using it. AI, especially in its current form, will not be doing a human culling.

Humans do that well enough already.

And how can you be certain, if virus generation is an actual possibility, that there won't be another AI already made to combat such viruses?

I mean, even that hypothetical scenario is very scary, though lol

Sure, I'm not certain at all, maybe, but are you certain enough to bet your life on it?

I'm no tech guy, but in my understanding, AI is just a tool that, in our capitalist nightmare of a world, only amplifies oppression and profit-oriented practices over people and the planet. It's nowhere near being sentient or actually thinking.

I'm all for AI being used for good.

I'm also for splitting the atom being used for good....

Exactly. It's almost impossible to imagine anything about AI being 'good' because so little of any real substance is permitted to work for 'good'.

It'll work for profit, just like everything else.

"including business founders, CEOs" - Oh, well, that's ok then. There was nothing to worry about, after all.

That “if” is doing an awful lot of heavy lifting.

AI will be used to replace human labor, after which the vast majority of humanity will be exterminated. Not by the AI itself, that is, but by its wealthy human owners. That's my prediction.

Now that the AI's quality is rapidly degrading, I'm significantly less worried about glorified auto-correct taking over the planet.

I'm out of the loop, how exactly are they getting worse?

ChatGPT's math response quality has declined (at least in certain areas).

I am by no means an AI expert, but I would imagine this decrease in quality coming from one of three things:

- Super fast development increasing ChatGPT's dataset, but decreasing its reliability.

- ChatGPT is far more censored than it was before, and this could have hurt the system's reliability.

- Maybe large-scale, mature large language models just don't scale well when exposed to certain kinds of inputs.

If the answer is one of the first two, I would expect an uncensored, open source LLM to overtake ChatGPT in the future.

To be fair, SkyNet was created for good, as well.

Well it’s a good thing the AI experts found a way to protect their jobs.

Exactly how it always starts in dystopian fiction.