This was common advice for parents in the 80s and 90s. If someone had to pick me up from school unexpectedly, my parents gave them a code word to tell me, so I'd know it wasn't a child abduction.

Right now deepfakes don't work well when the face is viewed from extreme angles, so you can ask them to slowly turn their face to the side or up/down as far as they can until the face is not visible. They also don't work well when something obstructs the face, so ask them to put their hand in front of their face. They also can't seem to render the mouth right if you open it too wide or stick out your tongue.

I base this on a deepfake app I tried: https://github.com/s0md3v/roop . But as the tech improves, it might be able to handle those cases in the future.

Edit: chances are the scammer used a live deepfake app like this one: https://github.com/iperov/DeepFaceLive . It also supports using the Insight model, which only needs a single well-lit photo to impersonate someone.

> Right now deepfakes don't work well when the face is viewed from extreme angles, so you can ask them to slowly turn their face to the side or up/down as far as they can until the face is not visible.

or, you know, you can just pick up the phone and call them.

You might not be aware of it, but in India (and SEA), WhatsApp video calls are a lot more common than calls over your carrier's phone service. No one would think twice about receiving a WhatsApp video call there.

i am not aware of that, no, but my point is not that the video call itself is suspicious. it is that if you have a suspicion for whatever reason, a normal cell call for verification is far easier than doing the strange gymnastics the person above suggested (which may or may not work).

I guess that also allows for some 'benefit of the doubt' from the point of view of the victim. It's probably harder to spot artifacts that would be obvious on a TV or monitor when the image is very small, and any glitches could be put down to the video stream or compression.

I had this attack tried on me. It was a video call from my friend's Facebook account. If I didn't know enough to be suspicious, I wouldn't have answered. Luckily I have that friend on Signal, so I knew they wouldn't have called me on Facebook asking for money. I tried calling on Signal, but they didn't answer. They must've not had their phone on them. Calling their home phone worked, though, which is kind of a weird thought.

Remember, if it’s truly life threatening, the hospital is going to do the surgery and gouge you for it later.

The time pressure is meant to prevent you from looking into it.

Hang up, call them… Don't just hand money over the phone. Use an excuse like calling your bank or something.

Fortunately, I hate videocalls and have no reason to use them, so if my friend videocalled me I'd ask what the fuck they were doing and immediately be suspicious.

Especially if they were suddenly asking you for money.

My first thought would be "wtf? How did WhatsApp get installed" followed by throwing my phone in a lake.

I'm in the US and have a well off friend who had his Facebook hacked. The bad actors sent messages to his friends asking to borrow $500 until tomorrow because his bank accounts were locked and he needed the cash. Someone who was messaged by the bad actors posted a screenshot of a deepfaked video call he received that caused him to fall for it. Wild times we live in!

40,000 Indian rupees = $487 USD.

Wow that’s a cheap surgery! Definitely not US.

Yeah them paying in rupees might have been a hint to where it happened.

No. This is how you avoid the problem.

"Lemme call you back in 5 because "

If you'd lend them money you'll have their contact info. Go get a different phone and call them.

You're not wrong but it's going to take a long time for "that relative that is calling could be someone else" to be something that people actually think about. Simple to execute your solution but 99% of the people out there won't even consider the possibility.

"Hi, we are Chase Bank and we sent you 40k, please give us the codes to Amazon gift cards to pay it back" still works on the elderly. This trick is going to wreak havoc among old people.

Guy who scammed his friend out of $500: oh, no it totally wasn't me man. There was a video? Weird it must have been a Randeep Fake

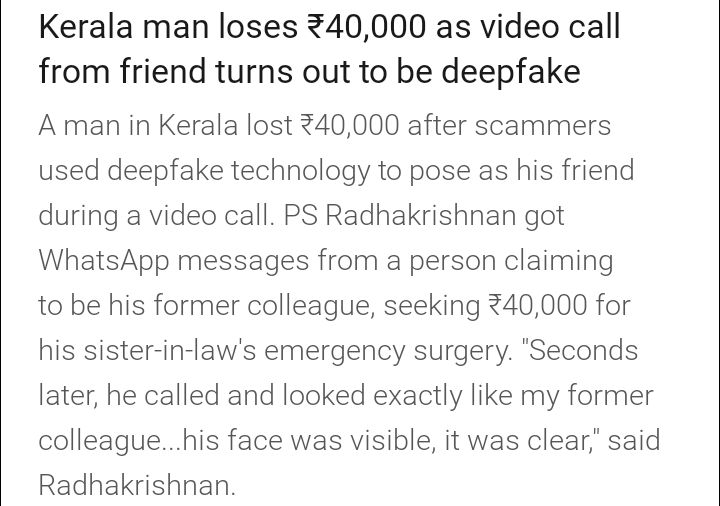

Source on the image? Seems to be a snippet of a longer article.

EDIT: looking up the text of the image gives me https://inshorts.com/m/en/news/kerala-man-loses-%E2%82%B940000-as-video-call-from-friend-turns-out-to-be-deepfake-1689663557129, which is just the snipped text, but points at

as the source. I get images get more engagement than links, but it's important to have the source handy.

I was so hoping the crappy "hey, a text thing I want to share, let me take a fucking attributionless accessibility-poisoning screenshot and upload it like a psychopath instead of just copy/pasting the link to the text or the text itself like a decent human being" routine would die with Reddit. We should be better than that here.

I even get why, images inherently get more eyes on them than articles through links, but the least we can do is include the source in the post body.

Isn't that what we've already been using gpg for? More communication sites should implement it

Is it a user problem or platform problem that more services don't implement some sort of OpenPGP solution? I mean to say, I absolutely agree this is a good idea, but is the obstacle the users or the services? I can see people getting really confused and not knowing to treat their private keys properly, etc. So are services afraid it'll drive users away or are services afraid of it for some other reason?

I feel like it's kind of a mix of both. It's definitely a hassle to use and check as a user, but I think part of the reason is that sites just treat it as an extra thing rather than integrating it into their service.
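
For anyone wondering what "prove it's really you" could look like in code, here is a rough sketch. To be clear about the assumptions: this is not OpenPGP or gpg. It swaps in a much simpler technique, an HMAC challenge-response over a secret that the two of you agreed on in person beforehand (basically a digital version of the kid code word). The function names `make_challenge`, `answer_challenge` and `verify_answer` are made up for illustration, and a real service would need key management that this ignores.

```python
import hashlib
import hmac
import secrets


def make_challenge() -> str:
    """Return a fresh random challenge so an old answer cannot be replayed."""
    return secrets.token_hex(16)


def answer_challenge(shared_secret: bytes, challenge: str) -> str:
    """Compute the answer only someone holding the shared secret can produce."""
    return hmac.new(shared_secret, challenge.encode(), hashlib.sha256).hexdigest()


def verify_answer(shared_secret: bytes, challenge: str, answer: str) -> bool:
    """Check the answer with a constant-time comparison."""
    expected = answer_challenge(shared_secret, challenge)
    return hmac.compare_digest(expected, answer)


if __name__ == "__main__":
    # Hypothetical secret, agreed on face to face and never sent over chat.
    secret = b"what's wrong with Woofie?"
    challenge = make_challenge()
    reply = answer_challenge(secret, challenge)  # computed on the friend's device
    print(verify_answer(secret, challenge, reply))
```

A scammer with your friend's face but not the secret can't produce a valid reply, and because the challenge is random each time, recording an earlier reply doesn't help them either.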

Don't deep fakes always look a little clunky?

Yeah until they don't anymore lol.

They could blame a bad connection.

Joke's on you, scammers. Can't deepfake me with a friend's face if I don't got any friends to deepfake.

I got one of these a few months ago. I could tell it was fake before I even answered, but I was curious so I pointed my own camera at a blank wall and answered. It was creepy to see my friend's face (albeit one that was obviously fake if you knew what to look for) when I answered.

"Hey, what's wrong with Woofie?"

Your friend is dead

What's my kid code?

Easy solution: Never give money that’s requested like this. Give the money in person or not at all.

If the friend doesn’t like it they can go to the bank. If they don’t like my terms they can pay interest to them.

Sorry people, I’m not your fuckin loan officer and scams are just too easy.

Here’s hoping for popularising secure communication protocols. It’s gonna become a must at some point.

Gr. It's not the technology that pisses me off. It's people forgetting the fundamental rule that everything on the internet is fake until definitively proven otherwise.

Even after proven, nothing digital should ever rise to 100% trust. Under any circumstances whatsoever. 99% is fine. 100% is never.

Hell, even real life inputs from your eyes don't get 100% trust. People are well aware their eyes can play tricks. But somehow go digital and people start trusting, even though digital is easier to corrupt than irl information in every possible way.

I think it's pretty unreasonable to expect someone in 2023 to not trust a video call from someone they know. We are entering that period now, but I could have easily been fooled the same way. I bet you could have too.

Perhaps it's because I pre-date most internet technology, but I am extremely distrustful in all digital spaces. Everyone should've started being extremely distrustful years ago, if they weren't already. Not today.

You don't wait for a big problem to smack you in the face. That's how you lose 40k like our elderly friend. You just get to be in the first wave of potential victims that way.

I grew up before the internet myself. I can't say I'm on high alert for fake video calls lol. I will be moving forward, however, now that it's a credible threat.

"Hello dear, it's your mother. Haven't heard from you in a while."

"Nice try scammer, go to hell!!"