this post was submitted on 14 Jun 2024

667 points (92.9% liked)

Programmer Humor

Welcome to Programmer Humor!

This is a place where you can post jokes, memes, humor, etc. related to programming!

For sharing awful code, there's also Programming Horror.

Rules

- Keep content in english

- No advertisements

- Posts must be related to programming or programmer topics

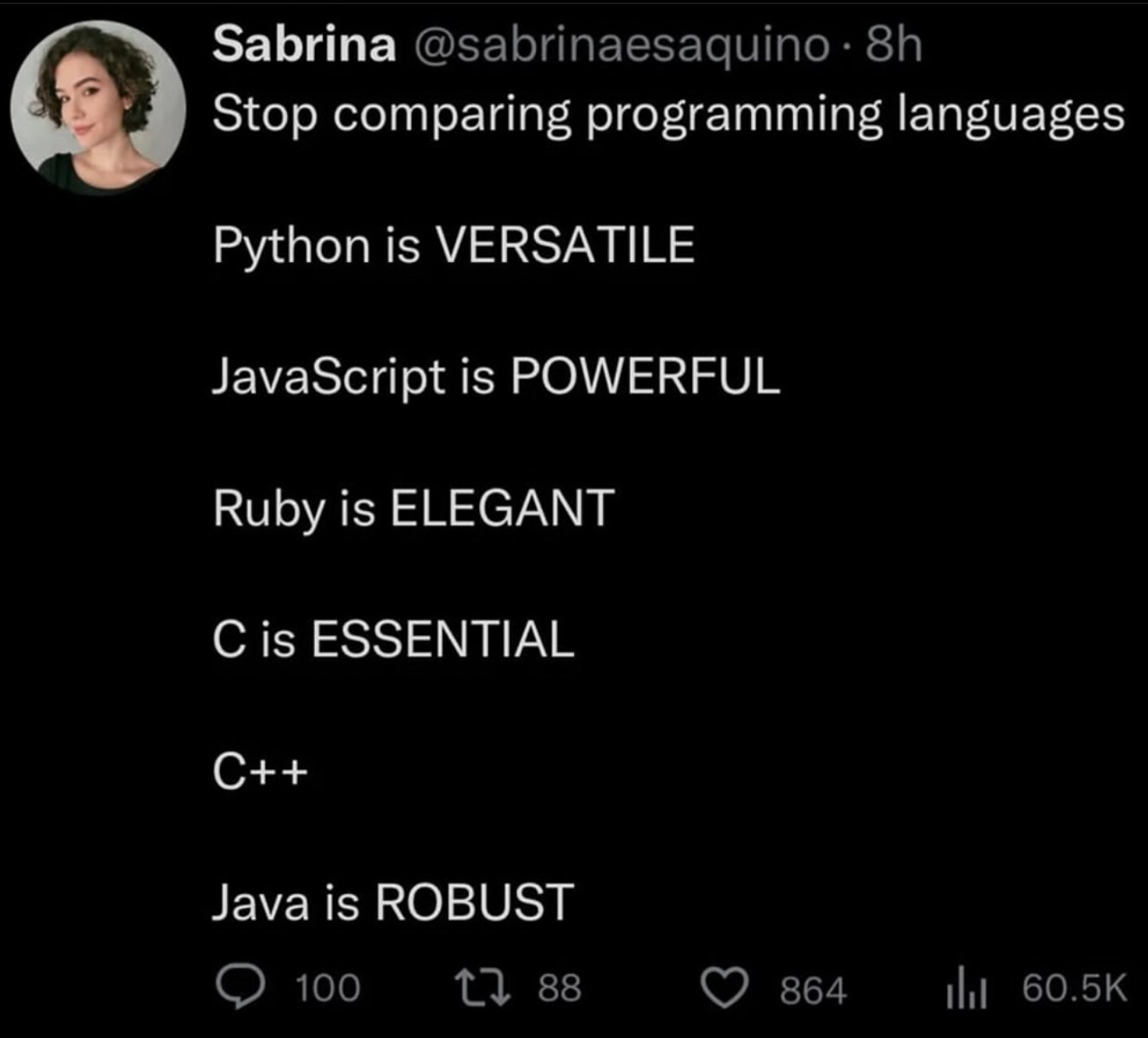

exposing the machinations of the underlying CPU with no regard for safety is like, the definition of a footgun

Okay, but how do you code on a CPU without directly interfacing the CPU at some point? Python and JavaScript both rely on things written in mid-level languages. There's a difference between a bad tool and one that just has limitations inherent to the technology.

Like, to echo the meme a bit, it's not a totally straight comparison. They have different roles.

a footgun isn't inherently bad, it just implies a significant amount of risk

yes, if you need the ability to code on a low level, maybe C is necessary, but the times where that is actually necessary is smol

also rust

Yes, also Rust. It wasn't an option until recently though.

The times when C or C++ is worth it definitely isn't always, but I'm not sure I'd class much of OS programming and all embedded and high-performance computing as small. If you have actual hard data about how big those applications are relative to others, I'd be interested.

Also, it's a nitpick, but I'd personally say a footgun has to be unforeseeable, like literal shoe guns being added to a video game where guns were previously always visible. Once you understand pointers C is reasonably consistent, just hard and human-error-prone. The quirks follow from the general concepts the language is built on.

There were memory-safe languages long before C was invented, though; C was widely considered "dangerous" even at the time.

True, but AFAIK they all sucked really bad. If you needed to make something that performed back then you wrote in assembly.

FORTRAN might be a good counterexample. It's pretty fast, and I'm not actually sure if it's memory safe; it might be. But, it's definitely very painful to work with, having had the displeasure.

That's pure assumption and, as far as I can tell, not actually true. PASCAL was a strong contender. No language was competitive with handwritten assembly for several decades after C's invention, and there's no fundamental reason why PASCAL couldn't benefit from intense compiler optimizations just as C has.

Here are some papers from before C "won", a more recent article about how PASCAL "lost", and a forum thread about what using PASCAL was actually like. None of them indicate a strong performance advantage for C.

Hmm, that's really interesting. I went down a bit of a rabbit hole.

One thing you might not know is that the Soviets had their own, actually older version of C, the Адресный (Address) programming language, which also had pointers and higher-order pointers, and probably was memory-unsafe as a result (though even with some Russian, I can't find anything conclusive). The thing I eventually ran into is that Pascal itself has pointer arithmetic, and so is vulnerable to the same kinds of errors. Maybe it was better than C, which is fascinating, but not that much better.

Off-topic, that Springer paper was also pretty neat, just because it sheds light on how people thought about programming in 1979. For example:

I don't see a lot of people denying that 2 is a good metric today. In fact, in the rare exceptions where someone has come right out and said it, I've suspected JS Stockholm syndrome was involved. Murphy's law is very real when you not only have to write code, but debug and maintain it for decades as a large team, possibly with significant turnover. Early on they were still innocent of that, and so this almost reads like something a non-CS academic would write about programming.

Indeed, I had no idea there are multiple languages referred to as "APL".

I feel like most people defending C++ resort to "people shouldn't use those features that way". 😅

As far as I can tell, pointer arithmetic was not originally part of PASCAL; it's just included as an extension in many implementations, but not all. Delphi, the most common modern dialect, only has optional pointer arithmetic, and only in certain regions of the code, kind of like `unsafe` in Rust. There are also optional bounds checks in many (possibly most) dialects. And in any case, there are other ways in which C is unsafe.

And yeah, I'm with you, that's a shit argument. A language is a tool, it exists to make the task easier. If it makes it harder by leading you into situations that introduce subtle bugs, that's not a good tool. Or at least, worse than an otherwise similar one that wouldn't.

Without a super-detailed knowledge of the history and the alternative languages to go off of, my suspicion is that being unsafe is intrinsic to making a powerful mid-level language. Rust itself doesn't solve the problem exactly, but does control flow analysis to prove memory safety in (restricted cases of) an otherwise unsafe situation. Every other language I'm aware of either has some form of a garbage collector at runtime or potential memory issues.

once you understand C++ the pitfalls of C++ are reasonably consistent

there are like what, 3 operating systems these days?

assume those are all written entirely in c and combine them and compare that to all code ever written

All of C++? That's unreasonable, it's even in the name that it's very expansive. Yes, if you already know a thing, you won't be surprised by it, that's a tautology.

C is more than just pointers, obviously, but the vast majority of the difficulty there is pointers.

Plus all previous operating systems, all supercomputer climate, physics and other science simulations, all the toaster and car and so on chips using bespoke operating systems because Linux won't fit, every computer solving practical engineering or logistics problems numerically, renderers...

Basically, if your computational resources don't vastly exceed the task to be done, C, Rust and friends are a good choice. If they do, use whatever is easy to not fuck up, so maybe Python or Haskell.

similarly, "all of pointers" is unreasonable

"all of pointers" can have a lot of unexpected results

that's literally why java exists as a language, and is so popular

sure, and the quantity of code where true low-level access is actually required is still absolutely minuscule compared to that where it isn't

How? They go where they point, or to NULL, and can be moved by arithmetic. If you move them where they shouldn't go, bad things happen. If you dereference NULL, bad things happen. That's it.
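To make that model concrete, here is a minimal sketch in C. The function name `sum_ints` is hypothetical, made up for illustration. It walks an array by pointer arithmetic, stops at the one-past-the-end address, and refuses to dereference NULL.

```c
#include <stddef.h>

/* Hypothetical helper: sum n ints starting at p.
 * Returns 0 for a NULL pointer, so NULL is never dereferenced. */
long sum_ints(const int *p, size_t n) {
    if (p == NULL) {
        return 0;
    }
    long total = 0;
    /* One past the last element: legal to compute and compare against,
     * but not legal to dereference. */
    const int *end = p + n;
    for (; p != end; ++p) {
        total += *p; /* p always points at a real element here */
    }
    return total;
}
```

Everything that can go wrong sits outside the function: if the caller passes an `n` larger than the real array, the loop moves the pointer where it shouldn't go, and nothing in the language stops it.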

If you need to address physical memory or something, that's a small subset of this for sure. It also just lacks the overhead other languages introduce, though. Climate simulations could be in Java or Haskell, but usually aren't AFAIK.

what part of that is explicit to how `scanf` works?

I suppose if you treat scanf as a blackbox, then yeah, that would be confusing. If you know that it's copying information into the buffer you gave it, obviously you can't fit more data into it than it's sized for, and so the pointer must be wandering out of range.
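As a rough sketch of the buffer-size point: a bare `%s` has no idea how big the destination is, but a field width caps how much gets copied. The example below uses `sscanf` on a string rather than `scanf` on stdin purely so it is easy to try out; the names `read_word` and `WORD_CAP` are made up for illustration.

```c
#include <stdio.h>

#define WORD_CAP 16 /* hypothetical buffer size for this sketch */

/* Copy the first whitespace-delimited word of input into out.
 * Returns 1 if a word was read, 0 otherwise. */
int read_word(const char *input, char out[WORD_CAP]) {
    /* "%15s" stores at most 15 characters plus the terminating '\0',
     * so it cannot write past the 16-byte buffer. A bare "%s" would
     * keep copying for as long as the input word continues. */
    return sscanf(input, "%15s", out) == 1;
}
```

The width has to be kept in sync with the buffer size by hand (15 for a 16-byte buffer), which is its own small trap.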

Maybe C would be better without stdlib, in that sense. Like, obviously it would be harder to use, but you couldn't possibly be surprised by a library function's lack of safeness if there were none.

yeah i mean if you grok the underlying workings of `scanf` then there's no problem

i'd just argue that understanding what you need to understand is the problem with straight c, and with any language like c++ where you're liable to shoot thineself in thy foot

I'm wondering now how much you could add without introducing any footguns. I'd guess quite a bit, but I can't really prove it. Smart pointers, at least, seem like the kind of thing that inevitably will have a catch, but you could read in and process text from a file more safely than that, just by implementing some kind of error handling.
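For what it's worth, a minimal sketch of reading text from a file with explicit error handling might look like the following. It only uses standard library calls (`fopen`, `fgets`, `ferror`, `fclose`); the function name `count_lines` and the 256-byte chunk size are arbitrary choices for illustration.

```c
#include <stdio.h>
#include <string.h>

/* Count newline-terminated lines in a text file.
 * Returns 0 on success and stores the count in *out_lines;
 * returns -1 if the file cannot be opened, read, or closed. */
int count_lines(const char *path, long *out_lines) {
    FILE *f = fopen(path, "r");
    if (f == NULL) {
        return -1; /* report the failure instead of carrying on */
    }
    char buf[256];
    long lines = 0;
    /* fgets never writes more than sizeof buf bytes, terminator included.
     * A long line arrives in several chunks; only the chunk holding the
     * '\n' is counted, so each line is counted once. */
    while (fgets(buf, sizeof buf, f) != NULL) {
        if (strchr(buf, '\n') != NULL) {
            ++lines;
        }
    }
    int failed = ferror(f); /* tell a read error apart from end-of-file */
    if (fclose(f) != 0) {
        failed = 1;
    }
    if (failed) {
        return -1;
    }
    *out_lines = lines;
    return 0;
}
```

The caller still has to check the return value, so this is safer than the `scanf` pattern rather than footgun-free.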