this post was submitted on 24 Feb 2024


Programmer Humor


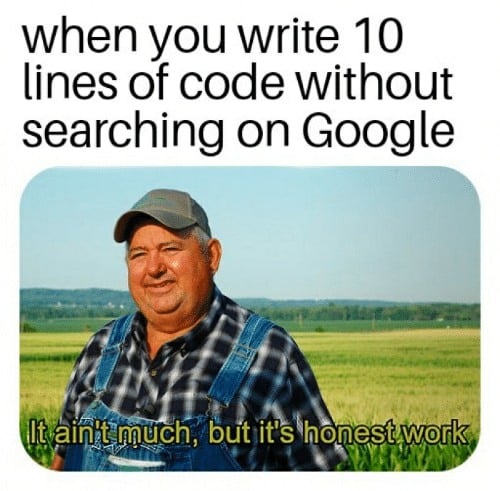

Me running an LLM at home:

The same image, but the farmer is standing in front of a field of poppies (for opioid production)

I am researching doing the same, but I know nothing about running my own yet. Did you train your LLM for programming in any way, or did you just download and run an open-source one? If so, which model etc. do you use?

Run an open-source one. Training requires a lot of knowledge and even more hardware resources and time. Fine-tuned models are available for free online, so there is not much point in training one yourself.

Options are:

https://github.com/oobabooga/text-generation-webui

https://github.com/Mozilla-Ocho/llamafile

https://github.com/ggerganov/llama.cpp

I recommend llamafile, as it's the easiest option to run. The GitHub README has everything you need in its "Quickstart" section.

The default is a bit restricted on Windows, though. Since a llamafile bundles the LLM weights with the executable, and Windows has a 4GB limit on executables, you're restricted to very small models. Workarounds are available, though: for example, you can run the llamafile executable on its own and load separate GGUF weights with `-m`.
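To see why the 4GB limit rules out most models, here is a rough back-of-the-envelope sketch. It assumes the limit is 2**32 bytes and approximates the weights' size as parameters × bits per weight / 8, using about 4.5 bits per weight (llama.cpp's Q4_0 packs 32 4-bit values plus a 16-bit scale per block). It ignores metadata and the bundled runtime, so treat the numbers as estimates, not exact file sizes.

```python
# Back-of-the-envelope check: do a model's quantized weights fit under the
# Windows executable size limit? Assumptions (not from llamafile docs):
#   - the limit is treated as exactly 2**32 bytes (~4 GiB)
#   - size ~= parameters * bits_per_weight / 8, ignoring metadata and runtime

WINDOWS_EXE_LIMIT_BYTES = 2**32  # assumed ~4 GiB ceiling


def estimated_weight_bytes(n_params: float, bits_per_weight: float) -> int:
    """Approximate size of the weights alone, in bytes."""
    return int(n_params * bits_per_weight / 8)


def fits_in_windows_exe(n_params: float, bits_per_weight: float) -> bool:
    """True if the estimated weights stay under the assumed 4 GiB limit."""
    return estimated_weight_bytes(n_params, bits_per_weight) < WINDOWS_EXE_LIMIT_BYTES


if __name__ == "__main__":
    # ~4.5 bits per weight roughly matches a Q4_0-style 4-bit quantization.
    for name, params in [("7B", 7e9), ("13B", 13e9), ("34B", 34e9)]:
        size_gib = estimated_weight_bytes(params, 4.5) / 2**30
        print(f"{name} @ ~4.5 bits: ~{size_gib:.1f} GiB, fits: {fits_in_windows_exe(params, 4.5)}")
```

Under these assumptions a 7B model at 4-bit just squeezes in, while 13B and larger do not, which is why anything bigger needs the external-weights route.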

I'm gonna give llamafile a go! I want to run it at least once with a different set of weights, just to see it work and to see how different weights handle the same inputs.

The reason I am asking about training is my work, where fine-tuning our own models is going to come knocking soon, so I want to stay a bit ahead of the curve, even though it already feels like I am late to the party.

Have a look at the llamafile models, they're pretty cool: just rename the file to xxx.exe and run it on Windows, or `chmod +x` it and run it on Linux.
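The rename-or-chmod step can be sketched in Python as below. This is a hypothetical helper (`make_runnable` is not part of llamafile); it assumes that Windows only needs the `.exe` suffix and that on Linux/macOS adding the executable bits is equivalent to `chmod +x`.

```python
import stat
import sys
from pathlib import Path


def make_runnable(path: str, platform: str = sys.platform) -> Path:
    """Hypothetical helper: prepare a downloaded llamafile so the OS will run it.

    - Windows: append '.exe', since Windows decides executability by extension.
    - Other platforms: add the user/group/other execute bits, like `chmod +x`.
    Returns the (possibly renamed) path.
    """
    p = Path(path)
    if platform.startswith("win"):
        if p.suffix.lower() != ".exe":
            new_p = p.with_name(p.name + ".exe")  # e.g. model.llamafile -> model.llamafile.exe
            p.rename(new_p)
            p = new_p
    else:
        mode = p.stat().st_mode
        p.chmod(mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return p
```

After that you launch the resulting file directly, as the comment above describes.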

The currently supported models are limited, though; you could try Code Llama.

Where do you get it? Hugging Face?

https://llamafile.ai (though it's down for the moment)

https://github.com/Mozilla-Ocho/llamafile

Lots of technical details, but essentially a llamafile is an engine + model + web UI in a single executable file. You just download it, run it, and stuff happens.
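Besides the browser UI, the bundled server can be scripted. A minimal sketch using only the standard library, assuming the llamafile is already running on its default `http://localhost:8080` and exposes an OpenAI-style `/v1/chat/completions` route (check the README for your version). The `"LLaMA_CPP"` model name is a placeholder assumption, and `build_chat_request`/`ask` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: a llamafile server is running locally on its default port.
BASE_URL = "http://localhost:8080"


def build_chat_request(prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": "LLaMA_CPP",  # placeholder model name (assumption)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first reply's text (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Splitting request construction from sending keeps the payload easy to inspect without a server; `ask("Write a haiku about segfaults")` only works once the llamafile is actually running.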

Thanks!