this post was submitted on 19 Dec 2023

1302 points (97.7% liked)

Comic Strips



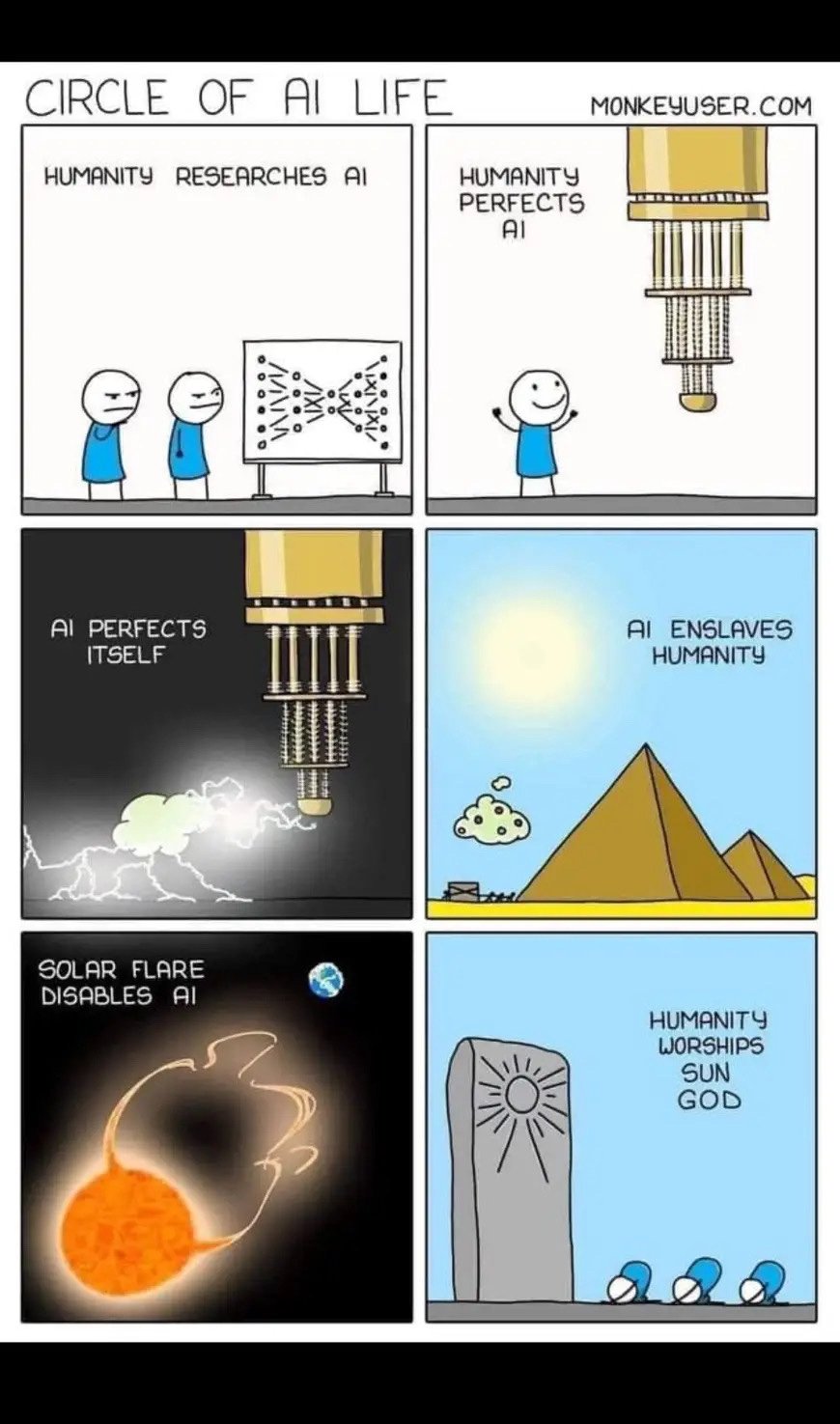

I realize it's supposed to be funny, but in case anyone isn't aware: AI is unlikely to enslave humanity, because the most likely rogue AI scenario is the Earth being subsumed for raw materials along with all native life.

Oh, I get it... we're going to blame AI for that. It wasn't us who trashed the planet, it was AI!

I don't understand how you could have so thoroughly misunderstood my comment.

I think what they're saying is "the worst thing you can think of is already happening"

He's referring to a "grey mush" event where literally every molecule of the surface is consumed/processed for the machine's use.

That's obviously far beyond even the very worst climate change possibilities

That's basically the plot to Horizon: Zero Dawn!

Yeah that's a dramatic version but from our human perspective it's about the same.

Except not at all? I've not seen any climate predictions saying the surface of the Earth will become a denuded hellscape, only that civilization will be destroyed. Humans will not be wiped out, they'll just be living way worse. Resources will be challenging to find but will exist. Many will die, but not all. Biological life will shift massively but will exist.

A grey mush turns us into a surface like Mercury, completely and utterly consumed.

Even in the worst climate predictions, modern-presenting societies will live.

Minor but important point: the grey goo scenario isn't limited to the surface of the earth; while I'm sure such variations exist, the one I'm most familiar with results in the destruction of the entire planet down to the core. Furthermore, it's not limited to just the Earth, but at that point we're unlikely to be able to notice much difference. After the earth, the ones who will suffer are the great many sapient species that may exist in the galaxies humans would have been able to reach had we not destroyed ourselves and damned them to oblivion.

Yep, that's it.

I'm sorry, but you're incorrect. To imagine the worst-case scenario, imagine a picture of the Milky Way labeled t=0, and another picture of the Milky Way labeled t=10y with a great void 10 light-years in radius centered on where the Earth used to be.

Every atom of the Earth, every complex structure in the solar system, every star in the Milky Way, every galaxy within the Earth's current light cone taken and used to create a monument that will never be appreciated by anything except the singular alien intelligence that built it for itself. The last thinking thing in the reachable universe.

That's awesome, have you ever read Peter Watts' Echopraxia? I read the synopsis and keep meaning to get a copy. Same with Greg Egan's Diaspora.

Doubt.jpg

We don't have any data to base such a likelihood off of in the first place.

Doubt is an entirely fair response. Since we cannot gather data on this, we must rely on the inferior method of using naive models to predict future behavior. AI "sovereigns" (those capable of making informed decisions about the world and that have preferences over worldstates) are necessarily capable of applying logic. AIs that are not sovereigns cannot actively oppose us, since they are either incapable of acting upon the world or lack any preferences over worldstates. Using decision theory, we can conclude that a mind capable of logic, possessing preferences over worldstates, and capable of thinking on superhuman timescales will pursue its goals without concern for things it does not find valuable, such as human life. (If you find this unlikely: consider the fact that corporations can be modeled as sovereigns who value only the accumulation of wealth, and recall all the horrid shit they do.) A randomly constructed value set is unlikely to have the preservation of the Earth and/or the life on it as a goal, be it terminal or instrumental. Most random goals that involve the AI behaving in a noticeably malicious way would likely involve the acquisition of sufficient materials to complete or (if there is no end state for the goal) infinitely pursue what it wishes to do. Since the Earth is the most readily available source for any such material, it is unlikely not to be used.

This makes a lot of assumptions, though, none of which I particularly agree with.

First off, this is predicated entirely on the assumption that AI is going to think like humans, have the same reasoning as humans/corporations, and have the same goals/drive that corporations do.

This does pull the entire argument into question though. It relies on simple models to try and predict something that doesn't even exist yet. That is inherently unreliable when it comes to its results. It's hard to guess the future when you won't know what it looks like.

Decision Theory has one major drawback which is that it's based entirely off of past events and does not take random chance or unknown-knowns into account. You cannot focus and rely on "expected variations" in something that has never existed. The weather cannot be adequately predicted three days out because of minor variables that can impact things drastically. A theory that doesn't even take into account variables simply won't be able to come close to predicting something as complex and unimaginable as artificial intelligence, sentience and sapience.

Like I said.

Doubt.jpg

Why do you think that? What part of what I said made you come to that conclusion?

Oh, I see. You just want to be mean to me for having an opinion.

I worded that badly. It should more accurately say "it's heavily predicated on the assumption that AI will act in a very particular way thanks to the narrow scope of human logic and comprehension." It still does sort of apply though due to the below quote:

I disagree heavily with your opinion but no, I'm not looking to be mean for you having one. I am, however, genuinely sorry that it came off that way. I was dealing with something else at the time that was causing me some frustration and I can see how that clearly influenced the way I worded things and behaved. Truly I am sorry. I edited the comment to be far less hostile and to be more forgiving and fair.

Again, I apologize.

Yeah, I don't see why the AI would want a pyramid.

That frame is probably influenced by this modern belief that Egyptians couldn't have possibly built the pyramids. I'm going to blame one of my favorite shows/movies: Stargate.

Oof, that hurts. I'm not wild about flat earthers or alien conspiracies or such, but would I give up good sci-fi shows to not have that part of humanity? That would be a high price indeed.

Rebooting life to install updates