this post was submitted on 08 Jul 2023

Memes

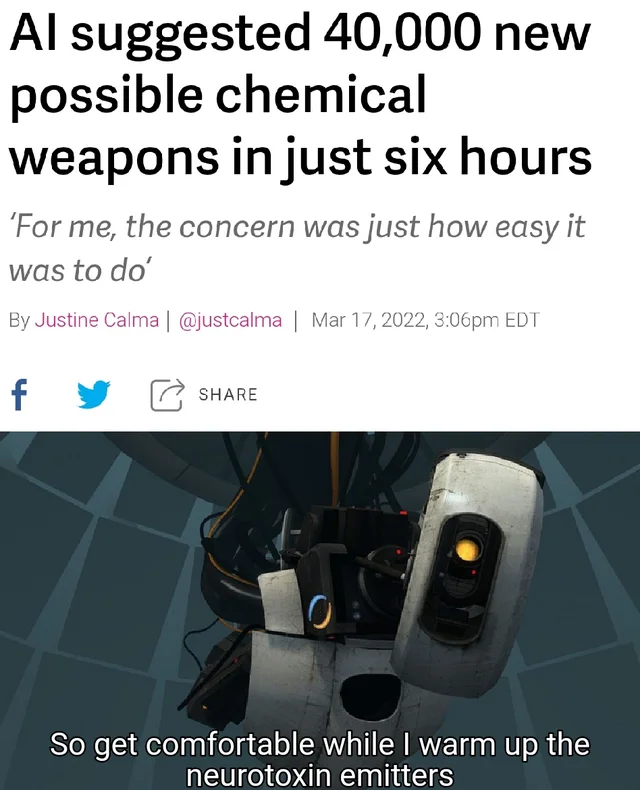

No problem. I'm totally on the same page that LLMs aren't the AI singularity. This one is actually kinda scary to me, though, because it shows how easily you can take a model or simulation and, instead of asking "how can I improve this?", ask "how can I make this worse?". The same tool used for good can easily be used for harm once you flip its "success conditions" around. Now, it's not the tech's fault, of course. It's a tool, and what matters is how it's used. But it shows how easily a tool like this can be turned to the wrong ends with very little "malicious" effort.

The thing is, if you run these tools to find, e.g., cures for a disease, they will also spit out 40k possible matches, and of those only a handful will actually work and become real medicine.

I guess harming might be a little easier than healing, but claiming that the output is actually 40k working neurotoxins is clickbaity, misleading, and incorrect.