this post was submitted on 02 Sep 2023

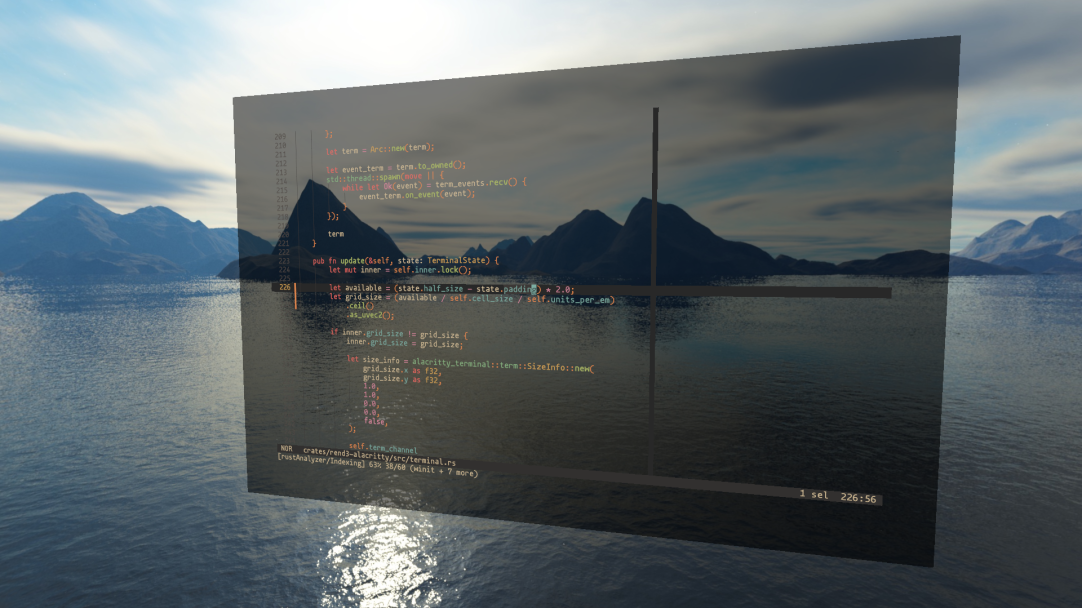

It is a VR project! I needed fast, highly legible text from a variety of viewing angles, and MSDFs fit the bill.

I'm curious: when you say a VR framework, do you mean something like StereoKit?

I don't actually know anything about VR development. I just assumed that you'd be working with something like a game engine to handle the play area, controls, and stereo 3D rendering, and generally to serve as a platform for using multiple applications in one headset.

StereoKit looks like it's exactly that. I'm wondering, how do you solve those things if you're not using a framework?

The go-to API for VR (or XR more broadly) these days is OpenXR, a standard for AR/VR developed by the Khronos Group, which also standardized OpenGL and Vulkan. OpenVR is an older, SteamVR-specific VR library that has been phased out in favor of OpenXR. Oculus also had its own Oculus SDK for developing VR apps for both desktop VR and Quest, which has likewise been deprecated in favor of OpenXR.

When you initialize OpenXR, whichever API loader you've instantiated connects to its compositor, which is typically a daemon process. The OpenXR API has an extension for each graphics API you might render with (OpenGL, Vulkan, DirectX, whatever), and you hook into the compositor through your graphics-specific extension to exchange GPU objects for compositing.
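
To make the extension part concrete, here is a minimal std-only sketch of picking the graphics binding extension before you create the instance. The extension names are the Khronos ones from the OpenXR registry; the `Backend` enum and both functions are hypothetical helpers I made up for illustration, and a real app would get the `available` list from the runtime's extension enumeration rather than a hard-coded slice.

```rust
/// Graphics backends an application might render with.
/// (Hypothetical enum, not part of any OpenXR binding.)
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Backend {
    Vulkan,
    OpenGl,
    D3D11,
    D3D12,
}

/// Khronos graphics-binding extension names per backend, most preferred first.
pub fn candidate_extensions(backend: Backend) -> &'static [&'static str] {
    match backend {
        Backend::Vulkan => &["XR_KHR_vulkan_enable2", "XR_KHR_vulkan_enable"],
        Backend::OpenGl => &["XR_KHR_opengl_enable"],
        Backend::D3D11 => &["XR_KHR_D3D11_enable"],
        Backend::D3D12 => &["XR_KHR_D3D12_enable"],
    }
}

/// Return the first candidate that the runtime advertises, if any.
/// `available` stands in for the list the runtime reports at startup.
pub fn pick_graphics_extension(backend: Backend, available: &[&str]) -> Option<&'static str> {
    candidate_extensions(backend)
        .iter()
        .copied()
        .find(|name| available.contains(name))
}
```

You would call something like `pick_graphics_extension(Backend::Vulkan, &available)` and bail out early with a readable error if it returns `None`, since without a graphics binding there is nothing to hand the compositor.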

OpenXR also handles frame synchronization (a tricky subject in VR because latency has to be tight enough to avoid giving the user motion sickness) and input handling, which is compositor- and hardware-specific.
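
The frame synchronization part boils down to a fixed call order per frame: `xrWaitFrame`, then `xrBeginFrame`, then your rendering, then `xrEndFrame`. Below is a rough sketch of that ordering against a mock runtime. The `FrameApi` trait, `MockRuntime`, and `run_frame` are all hypothetical stand-ins and not the API of any real crate; only the call order and the two `FrameState` fields mirror what the spec describes.

```rust
/// Subset of what `xrWaitFrame` hands back (mirrors XrFrameState).
#[derive(Debug, Clone, Copy)]
pub struct FrameState {
    /// When the runtime predicts this frame will be displayed, in nanoseconds.
    pub predicted_display_time: i64,
    /// Whether the runtime wants the app to render this frame at all.
    pub should_render: bool,
}

/// Hypothetical trait standing in for a session's frame calls.
pub trait FrameApi {
    fn wait_frame(&mut self) -> FrameState;
    fn begin_frame(&mut self);
    fn end_frame(&mut self, display_time: i64, layers_submitted: bool);
}

/// Run one frame in the required order. Returns true if we rendered.
pub fn run_frame<A: FrameApi>(api: &mut A, mut render: impl FnMut(i64)) -> bool {
    // The runtime throttles the app here and predicts the display time.
    let state = api.wait_frame();
    api.begin_frame();
    let rendered = state.should_render;
    if rendered {
        // Poses should be located for the predicted time, not "now".
        render(state.predicted_display_time);
    }
    // The frame must still be ended when nothing was rendered.
    api.end_frame(state.predicted_display_time, rendered);
    rendered
}

/// Mock runtime that records the order of calls (illustration only).
pub struct MockRuntime {
    pub calls: Vec<String>,
    pub next_time: i64,
    pub visible: bool,
}

impl FrameApi for MockRuntime {
    fn wait_frame(&mut self) -> FrameState {
        self.calls.push("wait_frame".to_string());
        FrameState {
            predicted_display_time: self.next_time,
            should_render: self.visible,
        }
    }

    fn begin_frame(&mut self) {
        self.calls.push("begin_frame".to_string());
    }

    fn end_frame(&mut self, display_time: i64, layers_submitted: bool) {
        self.calls
            .push(format!("end_frame({}, {})", display_time, layers_submitted));
    }
}
```

The thing to take away is that rendering is driven by the predicted display time the runtime gives you, and that a frame you begin always gets ended, even when the runtime says not to render.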

For Rust's purposes, you can find an example of how to plumb OpenXR's low-level graphics to wgpu here: https://github.com/philpax/wgpu-openxr-example

Once you can follow OpenXR's rules for graphics and synchronization correctly, you're off to the races, and there isn't much of a difference, at least on the lowest level, between your XR runtime and any other game engine or simulator.

StereoKit is really cool because it builds on OpenXR with a bunch of useful features like a UI system and virtual hands rendered where your controllers are. It also supports desktop mode.

If you're interested in this kind of stuff, I highly recommend reading the OpenXR specification. Despite being made by the same consortium that wrote the OpenGL and Vulkan specs, the OpenXR spec is highly readable and is a really good introduction to low-level VR software engineering.

Bro thanks for the intro to vr dev, this is really cool stuff