this post was submitted on 27 Nov 2024

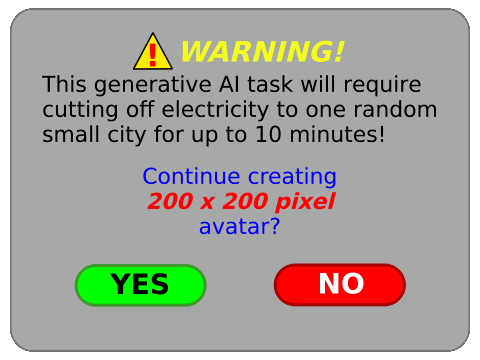

Is Midjourney available for use locally now? Or have you misunderstood Midjourney taking 30 seconds to generate from their server as happening locally?

I know a guy who runs Stable Diffusion XL locally with a few LoRAs. Last I heard, one 2K image took 2 minutes on an RTX 30- or 40-something card.

IDK how expensive Midjourney is in comparison, but running it locally doesn't sound impossible.

The impossible part is that it isn't publicly available. It's not an open model.

Oh, ok. I'm not into image generation, so I didn't know.

That sounds like a bit too much. Generating an SDXL image and then upscaling it is the common procedure, but that shouldn't take 2 minutes on a 40xx card. For reference, I can generate 3 batches of 5 images (without the upscaling step) in less than 2 minutes on my 4070 Ti. And that's without using faster SDXL models like Lightning or Turbo or whatever.