this post was submitted on 01 Oct 2024

361 points (91.1% liked)

Programmer Humor

Welcome to Programmer Humor!

This is a place where you can post jokes, memes, humor, etc. related to programming!

For sharing awful code, there's also Programming Horror.

Rules

- Keep content in English

- No advertisements

- Posts must be related to programming or programmer topics

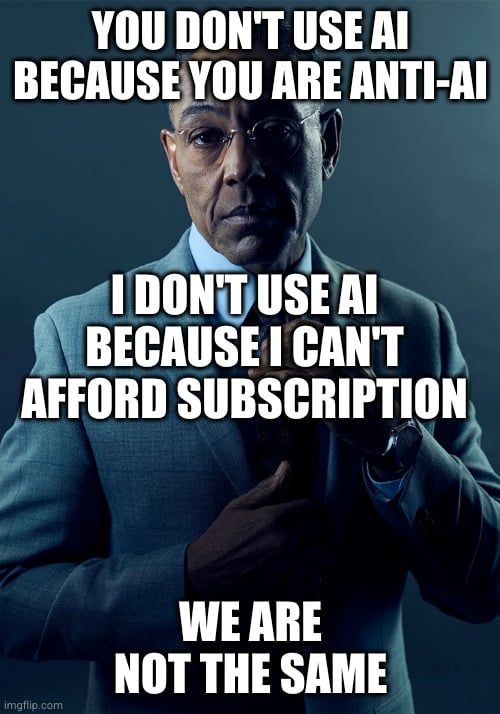

You don't even need a GPU. I can run Ollama with Open-WebUI on my CPU with an 8B model fast af.

I tried it on my CPU (with Llama 3.0 7B), but unfortunately it ran really slowly (I've got a Ryzen 5700X).

I ran it on my dual-core Celeron and... just kidding. Try the mini Llama 1B. I'm in the same boat with a Ryzen 5000-something CPU.