https://arxiv.org/pdf/1706.03762.pdf

Attention Is All You Need

By Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin

Word count: 4221

Estimated read time: 17 minutes

Summary:

This paper proposes the Transformer, a neural network architecture based solely on attention mechanisms, with no sequence-aligned RNNs or convolutions. The Transformer achieves state-of-the-art results in machine translation while being more parallelizable and requiring significantly less time to train. Key contributions:

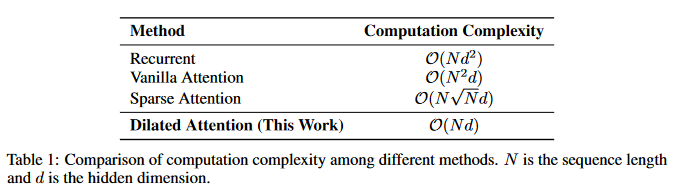

Proposes multi-head self-attention as a replacement for recurrence and convolutions in encoder-decoder architectures. Self-attention connects all positions with a constant number of sequentially executed operations, whereas recurrent layers require O(n) sequential operations.

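Introduces scaled dot-product attention, which divides the query-key dot products by sqrt(d_k) before the softmax. Without this scaling, dot-product attention performs worse than additive attention for large key dimension d_k; the paper suspects that large dot products push the softmax into regions with extremely small gradients. With the scaling, the model keeps the speed and memory advantages of dot-product attention, which can be implemented with optimized matrix multiplication.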
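As a minimal sketch of the formula Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, the following pure-Python version works on small lists of vectors. The function names and the toy inputs are illustrative assumptions, not code from the paper, and a practical implementation would use batched matrix operations instead of loops.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V one query at a time.

    queries: list of vectors of length d_k
    keys:    list of vectors of length d_k
    values:  list of vectors of length d_v (one per key)
    """
    d_k = len(keys[0])
    scale = 1.0 / math.sqrt(d_k)  # the scaling factor discussed above
    d_v = len(values[0])
    outputs = []
    for q in queries:
        # Scaled similarity between this query and every key.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_v)]
        )
    return outputs


# Toy inputs (hypothetical): two queries, two keys, two values.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
result = scaled_dot_product_attention(Q, K, V)
```

Each output row is a weighted average of the rows of `V`. Here the first query matches the first key most closely, so `result[0]` lies closer to `V[0]` than to `V[1]`.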

Employs sinusoidal positional encodings, added to the input embeddings, so that the model can make use of sequence order without recurrence. Reports that learned positional embeddings give nearly identical results.
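A sketch of the sinusoidal scheme, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)), is shown below. The function name and the small table size are assumptions chosen for illustration, and the sketch assumes an even `d_model`.

```python
import math


def sinusoidal_positional_encoding(num_positions, d_model):
    """Build a num_positions x d_model table of sinusoidal encodings.

    Assumes d_model is even: even columns hold sines, odd columns hold
    cosines, and each sine/cosine pair shares one wavelength.
    """
    table = []
    for pos in range(num_positions):
        row = [0.0] * d_model
        for i in range(0, d_model, 2):
            # i is the even column index, i.e. the "2i" in the formula.
            angle = pos / (10000 ** (i / d_model))
            row[i] = math.sin(angle)
            row[i + 1] = math.cos(angle)
        table.append(row)
    return table


# Hypothetical small table: 4 positions, model width 8.
pe = sinusoidal_positional_encoding(num_positions=4, d_model=8)
```

Row `pos` of the table would be added to the embedding of the token at position `pos`. Position 0 encodes as alternating 0 and 1, since sin(0) = 0 and cos(0) = 1.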

Achieves state-of-the-art BLEU scores on WMT 2014 English-to-German (28.4) and English-to-French (41.8, single model) translation at a fraction of the training cost of previous models. Also generalizes to English constituency parsing, where it outperforms all previously reported models except the Recurrent Neural Network Grammar and remains competitive even with limited training data.

The Transformer's reliance on attention and positional encodings rather than recurrence makes it well suited to parallelization and to modeling long-range dependencies, although the per-layer cost of self-attention grows quadratically with sequence length. The results demonstrate the potential of attention-based models to supplant RNNs and CNNs in sequence transduction tasks.

Evaluation:

The Transformer architecture offers several advantages for building large language models (LMs) and generative adversarial networks (GANs):

The Transformer is highly parallelizable since it does away with sequence-aligned RNNs. This makes it very suitable for scaling up with more parameters and data.

Multi-head self-attention lets the model jointly attend to information from different representation subspaces at different positions, so it can model dependencies regardless of their distance in the sequence. This is useful for long-range dependencies in large contexts.
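To make the "different representation subspaces" idea concrete, here is a small pure-Python sketch of multi-head self-attention: each head projects the inputs with its own query, key, and value matrices, runs scaled dot-product attention, and the head outputs are concatenated and projected once more. The helper names, the random weights, and the tiny dimensions are all assumptions for illustration; this is not the paper's implementation, and it omits masking, dropout, and batching.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for a single head."""
    scale = 1.0 / math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = matmul(q, k_t)
    weights = [softmax([scale * s for s in row]) for row in scores]
    return matmul(weights, v)


def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for learned weights: small random values."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(x, heads, w_o):
    """x: n x d_model inputs.
    heads: list of (W_q, W_k, W_v) triples, each d_model x d_head.
    w_o: (num_heads * d_head) x d_model output projection.
    """
    head_outputs = [
        attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
        for w_q, w_k, w_v in heads
    ]
    # Concatenate the heads feature-wise for each position, then project.
    concat = [sum((head[pos] for head in head_outputs), []) for pos in range(len(x))]
    return matmul(concat, w_o)


rng = random.Random(0)
d_model, num_heads = 8, 2
d_head = d_model // num_heads
heads = [
    tuple(random_matrix(d_model, d_head, rng) for _ in range(3))
    for _ in range(num_heads)
]
w_o = random_matrix(num_heads * d_head, d_model, rng)
x = random_matrix(3, d_model, rng)  # three positions
y = multi_head_self_attention(x, heads, w_o)
```

Every position attends to every other position in a single step, which is why distance does not matter here. Nothing in this computation depends on row order: reversing the rows of `x` simply reverses the rows of `y`, which is the reason the model needs positional encodings to use sequence order.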

Positional encodings allow the model to make use of sequence order without recurrence. This can enable generating coherent, ordered outputs in GANs and large LMs.

The Transformer performs well on constituency parsing even when trained only on the roughly 40K-sentence WSJ training set, suggesting its representations transfer well. This is promising for few-shot learning and fine-tuning large LMs.

The paper visualizes what individual attention heads attend to and shows that different heads learn different roles, which can inform work on interpretable attention-based representations.

Overall, the Transformer architecture seems very promising as a foundation for large-scale language modeling and GAN training. The representations it learns appear powerful, and the attention weights offer some insight into what the model has learned. The results on parsing suggest it can capture syntactic structure well. Its parallelizability enables scaling. Much follow-on work has already adapted and refined the Transformer, making it very relevant today.