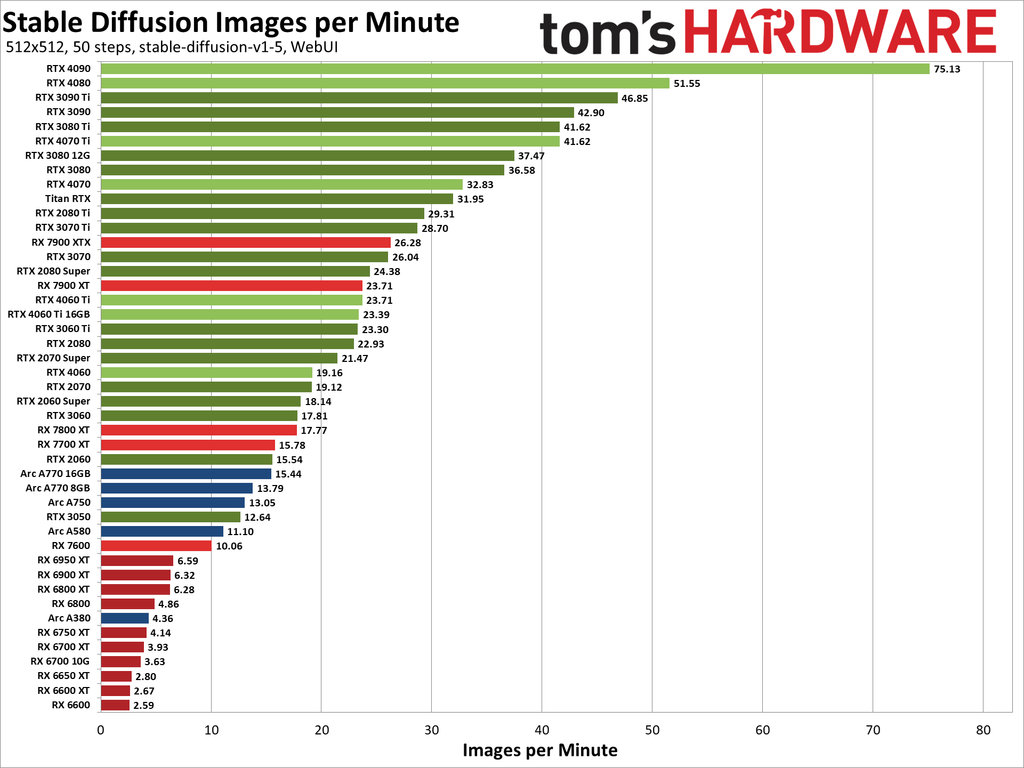

I currently have an RX 6700XT and I'm quite happy with it for gaming and regular desktop usage, but I was recently doing some local ML stuff and was just made aware of the huge gap NVIDIA has over AMD in that space.

But yeah, going back to NVIDIA (I used to run a 1080) after going AMD... feels kinda dirty to me ;-; I was very happy to move to AMD and finally be free from the walled garden.

At first I thought I'd just buy a second GPU, keep using my 6700XT for gaming, and use the NVIDIA card for ML, but unfortunately my motherboard doesn't have 2 PCIe slots I could use for GPUs, so I need to choose. I would be able to buy a used RTX 3090 for a fair price, since I don't want to go for current gen because of the current pricing.

So my question is: how is NVIDIA nowadays? I specifically mean Wayland compatibility, since I just recently switched and it would suck to go back to Xorg.

Other than that, are there any hurdles, issues, annoyances, or is it smooth and seamless nowadays? Would you upgrade in my case?

EDIT:

Forgot to mention, I'm currently using GNOME on Arch (btw), since that might be relevant.