Does this joke have a documentation page?

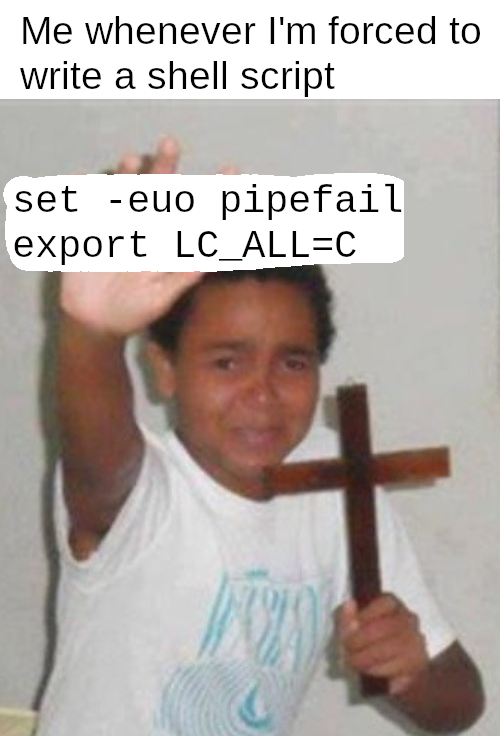

unironically, yes

This joke comes with more documentation than most packages.

Gotta love a meme that comes with a man page!

People say that if you have to explain the joke then it's not funny. Not here; here the explanation is part of the joke.

It is different in spoken form, written form (chat) and written as a post (like here).

In person, you get a reaction almost immediately. Written as a short chat, you also get a reaction. But a post like this is more of an accessibility thing than a sign that the joke isn't funny. You know, like those text descriptions of an image (usually for memes).

This is much better than a man page. Like, have you seen those things?

If you haven't, I really recommend that you look at Bash's man page.

It's just amazing.

I'm fine with my shell scripts not running on a PDP11.

My only issue is -u. How do you print help text for a missing required parameter? There's no way to test it with -z if the shell bails on the first line that touches the unset variable.

Edit: though I guess you could initialise your vars with bad defaults, and test for those.

```shell
#!/bin/bash
set -euo pipefail

if [[ -z "${1:-}" ]]
then
    echo "we need an argument!" >&2
    exit 1
fi
```

God I love bash. There's always something to learn.

my logical steps

- #! yup

- if sure!

- [[ -z makes sense

- ${1:-} WHAT IN SATANS UNDERPANTS.... parameter expansion I think... reads docs ... default value! shit that's nice.

it's like buying a really simple generic car then getting excited because it actually has a spare and cupholders.

Yeah, there's also a subtle difference between ${1:-} and ${1-}: The first substitutes if 1 is unset or ""; the second only if 1 is unset. So possibly ${foo-} is actually the better to use for a lot of stuff, if the empty string is a valid value. There's a lot to bash parameter expansion, and it's all punctuation, which ups the line noise-iness of your scripts.
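For anyone who wants to see that difference rather than take it on faith, here is a minimal sketch. The `show` function and the `fallback` string are made up for illustration; inside the function, `$1` stands in for a script's first argument.

```shell
#!/usr/bin/env bash
# Compare ${1:-fallback} with ${1-fallback} for unset, empty, and set values.
show() {
    # The first argument is only a label; after the shift, $1 plays the
    # role of a script's first positional parameter.
    local label=$1; shift
    printf '%s: colon=[%s] no-colon=[%s]\n' "$label" "${1:-fallback}" "${1-fallback}"
}

show unset          # no argument at all: both forms substitute
show empty ""       # empty argument: only the colon form substitutes
show set "value"    # ordinary argument: neither form substitutes
```

The middle case is the one that matters: if an empty string is a legitimate value, the colon form silently replaces it.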

I don't find it particularly legible or memorable; plus I'm generally not a fan of the variable amount of numbered arguments rather than being able to specify argument numbers and names like we are in practically every other programming language still in common use.

That's good, but if you like to name your arguments first before testing them, then it falls apart

```shell
#!/bin/bash
set -euo pipefail

myarg=$1

if [[ -z "${myarg}" ]]
then
    echo "we need an argument!" >&2
    exit 1
fi
```

This fails: under set -u, myarg=$1 aborts with an unbound variable error before the -z test ever runs. The solution is to do myarg=${1:-} and then test.

Edit: Oh, I just saw you did that initialisation in the if statement. Take your trophy and leave.
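Spelled out, the name-first variant with the `${1:-}` fix might look like the sketch below. `require_arg` is a hypothetical wrapper, used only so the check can be exercised twice in one script; it returns instead of exiting for the same reason.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: name the first argument before testing it.
require_arg() {
    local myarg=${1:-}   # empty string instead of an "unbound variable" abort
    if [[ -z "$myarg" ]]; then
        echo "we need an argument!" >&2
        return 1
    fi
    printf 'got: %s\n' "$myarg"
}

require_arg "hello"
require_arg || echo "missing argument was caught by our own check"
```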

Yeah, another way to do it is

```shell
#!/bin/bash
set -euo pipefail

if [[ $# -lt 1 ]]
then
    echo "Usage: $0 argument1" >&2
    exit 1
fi
```

i.e. just count arguments. Relatedly, fish has kind of the opposite situation here: you can name arguments in a better way, but there's no set -u.

```fish
function foo --argument-names bar
    ...
end
```

in the end my conclusion is that argument handling in shells is generally bad. Add in historic workarounds like if [ "x" = "x$1" ] and it's clear shells have always been Shortcut City

Side note: One point I have to award to Perl for using eq/lt/gt/etc for string comparisons and ==/</> for numeric comparisons. In shells it's reversed for some reason? The absolute state of things when I can point to Perl as an example of something that did it better
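A small sketch of why the reversed operators bite in practice. The `compare` function is made up for illustration; the point is that `<` inside `[[ ]]` compares strings, so "10" sorts before "9".

```shell
#!/usr/bin/env bash
# -lt compares integers; < inside [[ ]] compares strings character by character.
compare() {
    local a=$1 b=$2
    if [[ $a -lt $b ]]; then
        echo "numeric: $a is less than $b"
    else
        echo "numeric: $a is not less than $b"
    fi
    if [[ $a < $b ]]; then
        echo "string: $a sorts before $b"
    else
        echo "string: $a does not sort before $b"
    fi
}

compare 10 9
```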

Perl is the original GOAT! It took a look at shell, realised it could do (slightly) better, and forged its own hacky path!

I was about to say, half the things people write complex shell scripts for, I'll just do in something like Perl, Ruby, Python, even node/TS, because they have actual type systems and readability. And library support. Always situation-dependent though.

set -euo pipefail is, in my opinion, an antipattern. This page does a really good job of explaining why. pipefail is occasionally useful, but should be toggled on and off as needed, not left on. IMO, people should just write shell the way they write Go, handling every command that could fail individually. It's easy if you write a die function like this:

```shell
die () {
    message="$1"; shift
    return_code="${1:-1}"
    printf '%s\n' "$message" 1>&2
    exit "$return_code"
}

# we should exit if, say, cd fails
cd /tmp || die "Failed to cd /tmp while attempting to scrozzle foo $foo"

# downloading something? handle the error. Don't like ternary syntax? use if
if ! wget https://someheinousbullshit.com/"$foo"; then
    die "failed to get unscrozzled foo $foo"
fi
```

It only takes a little bit of extra effort to handle the errors individually, and you get much more reliable shell scripts. To replace -u, just use shellcheck with your editor when writing scripts. I'd also highly recommend https://mywiki.wooledge.org/ as a resource for all things POSIX shell or Bash.
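One way to read "toggled on and off as needed" is to enable pipefail inside a subshell, so the option never leaks into the rest of the script. This is only a sketch of that idea, not necessarily what the commenter does; `strict_pipe_status` is a made-up name.

```shell
#!/usr/bin/env bash
# Run one pipeline with pipefail enabled without changing the rest of the script.
strict_pipe_status() {
    # The subshell confines the option change to this one pipeline.
    ( set -o pipefail; false | cat )
    echo "with pipefail: $?"

    # Outside the subshell the default applies: only the last command counts.
    false | cat
    echo "without pipefail: $?"
}

strict_pipe_status
```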

I've been meaning to learn how to avoid using pipefail, thanks for the info!

After tens of thousands of bash lines written, I have to disagree. The article seems to argue against use of -e due to unpredictable behavior; while that might be true, I've found having it in my scripts is more helpful than not.

Bash is clunky. -euo pipefail is not a silver bullet but it does improve the reliability of most scripts. Expecting the writer to check the result of each command is both unrealistic and creates a lot of noise.

When using this error handling pattern, most lines aren't even for handling them, they're just there to bubble it up to the caller. That is a distraction when reading a piece of code, and a nuisance when writing it.

For the few times that I actually want to handle the error (not just pass it up), I'll do the "or" check. But if the script should just fail, -e will do just fine.
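As a rough sketch of that mix: `-e` stays on, and only the commands whose failure is expected get an explicit check. `lookup` and `CONFIG` are hypothetical names invented for this example.

```shell
#!/usr/bin/env bash
set -e

# Hypothetical lookup that may legitimately fail when the key is absent.
lookup() { grep -q "^$1=" <<< "$CONFIG"; }

CONFIG=$'name=demo\ncolor=blue'

# Handled explicitly: a miss is expected here, so it is tested or followed by ||.
if lookup color; then echo "color is configured"; fi
lookup missing || echo "missing is not configured, using a default"

# Everything else is left to -e to abort on.
echo "done"
```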

Yeah, while -e has a lot of limitations, it shouldn't be thrown out with the bathwater. The unofficial strict mode can still de-weird bash to an extent, and I'd rather drop bash altogether when they're insufficient, rather than try increasingly hard to work around bash's weirdness. (I.e. I'd throw out the bathwater, baby and the family that spawned it at that point.)

This is why I made the reference to Go. I honestly hate Go, I think exceptions are great and very ergonomic and I wish that language had not become so popular. However, a whole shitload of people apparently disagree, hence the popularity of Go and the acceptance of its (imo) terrible error handling. If developers don't have a problem with it in Go, I don't see why they'd have a problem with it in Bash. The error handling is identical to what I posted and the syntax is shockingly similar. You must unpack the return of a func in Go if you're going to assign, but you're totally free to just assign an err to _ in Go and be on your way, just like you can ignore errors in Bash. The objectively correct way to write Go is to handle every err that gets returned to you, either by doing something, or passing it up the stack (and possibly wrapping it). It's a bunch of bubbling up. My scripts end up being that way too. It's messy, but I've found it to be an incredibly reliable strategy. Plus, it's really easy for me to grep for a log message and get the exact line where I encountered an issue.

This is all just my opinion. I think this is one of those things where the best option is to just agree to disagree. I will admit that it irritates me to see blanket statements saying "your script is bad if you don't set -euo pipefail", but I'd be totally fine if more people made a measured recommendation like you did. I likely will never use set -e, but if it gets the bills paid for people then that's fine. I just think people need to be warned of the footguns.

EDIT: my autocorrect really wanted to fuck up this comment for some reason. Apologies if I have a dumb number of typos.

Putting or die “blah blah” after every line in your script seems much less elegant than op’s solution

The issue with set -e is that it's hideously broken and inconsistent. Let me copy the examples from the wiki I linked.

Or, "so you think set -e is OK, huh?"

Exercise 1: why doesn't this example print anything?

```shell
#!/usr/bin/env bash
set -e
i=0
let i++
echo "i is $i"
```

Exercise 2: why does this one sometimes appear to work? In which versions of bash does it work, and in which versions does it fail?

```shell
#!/usr/bin/env bash
set -e
i=0
((i++))
echo "i is $i"
```

Exercise 3: why aren't these two scripts identical?

```shell
#!/usr/bin/env bash
set -e
test -d nosuchdir && echo no dir
echo survived
```

```shell
#!/usr/bin/env bash
set -e
f() { test -d nosuchdir && echo no dir; }
f
echo survived
```

Exercise 4: why aren't these two scripts identical?

```shell
set -e
f() { test -d nosuchdir && echo no dir; }
f
echo survived
```

```shell
set -e
f() { if test -d nosuchdir; then echo no dir; fi; }
f
echo survived
```

Exercise 5: under what conditions will this fail?

```shell
set -e
read -r foo < configfile
```
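A hint for Exercise 1, for anyone stuck: the sketch below runs the same increment without `set -e` so the exit status can be inspected instead of killing the script. `let` returns a non-zero status when its last expression evaluates to 0, and `i++` evaluates to the old value.

```shell
#!/usr/bin/env bash
# No set -e here, so we can look at the exit statuses instead of dying on them.
i=0
let i++; echo "let i++ starting from 0 -> status $?, i is now $i"
let i++; echo "let i++ starting from 1 -> status $?, i is now $i"
```

The first increment succeeds in the arithmetic sense but still reports failure, which is exactly what `set -e` reacts to.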

And now, back to your regularly scheduled comment reply.

set -e would absolutely be more elegant if it worked in a way that was easy to understand. I would be shouting its praises from my rooftop if it could make Bash into less of a pile of flaming plop. Unfortunately, set -e is, by necessity, a labyrinthine mess of fucked up hacks.

Let me leave you with an allegory about set -e copied directly from that same wiki page. It's too long for me to post it in this comment, so I'll respond to myself.

Lol, I love that someone made this. What if your input has newlines tho, gotta use that NUL terminator!
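For reference, reading NUL-terminated records in bash usually comes down to `read -d ''`. A minimal sketch, with `count_records` as a made-up name:

```shell
#!/usr/bin/env bash
# Read NUL-delimited records so that embedded newlines survive intact.
count_records() {
    local n=0 record
    while IFS= read -r -d '' record; do
        n=$((n + 1))
    done
    echo "$n"
}

# Two records, the first of which contains a newline.
printf 'first\nline\0second\0' | count_records
```

A newline-based loop would count three records here; the NUL-based one counts two.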

God, I wish more tools had nice NUL-separated output. Looking at you, jq. I dunno why this issue has been open for so long, but it hurts me. Like, they've gone back and forth on this so many times...

Shell is great, but if you're using it as a programming language then you're going to have a bad time. It's great for scripting, but if you find yourself actually programming in it then save yourself the headache and use an actual language!

Your scientists were so preoccupied with whether they could, they didn’t stop to think if they should

Honestly, the fact that bash exposes low level networking primitives like a TCP socket via /dev/tcp is such a godsend. I've written an HTTP client in Bash before when I needed to get some data off of a box that had a fucked up filesystem and only had an emergency shell. I would have been totally fucked without /dev/tcp, so I'm glad things like it exist.

EDIT: oh, the article author is just using netcat, not doing it all in pure bash. That's a more practical choice, although it's way less fun and cursed.

EDIT: here's a webserver written entirely in bash. No netcat, just the /bin/bash binary https://github.com/dzove855/Bash-web-server
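A rough idea of what such an emergency HTTP client can look like. This is a sketch, not the commenter's actual script: `build_request` and `http_get` are hypothetical names, it assumes a bash built with /dev/tcp support, plain HTTP only, and it leans on `cat` to drain the socket.

```shell
#!/usr/bin/env bash
# Build a minimal HTTP/1.0 request; with 1.0 the server closes the connection when done.
build_request() {
    local host=$1 path=$2
    printf 'GET %s HTTP/1.0\r\nHost: %s\r\nConnection: close\r\n\r\n' "$path" "$host"
}

# Hypothetical helper: fetch a path over plain HTTP via bash's /dev/tcp pseudo-device.
http_get() {
    local host=$1 port=$2 path=$3
    exec 3<>"/dev/tcp/$host/$port" || return 1   # open a read/write socket on fd 3
    build_request "$host" "$path" >&3            # send the request
    cat <&3                                      # print status line, headers, and body
    exec 3<&-                                    # close the socket
}

# Usage (needs network access): http_get example.com 80 /
```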

I was never a fan of set -e. I prefer to do my own error handling. But, I never understood why pipefail wasn't the default. A failure is a failure. I would like to know about it!

IIRC if you pipe something to head, it will stop reading after some lines and close the pipe, leading to a pipe failure even if everything works correctly.
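That is easy to reproduce. In the sketch below, `yes` never stops on its own, so once `head` exits and closes the pipe, `yes` is killed by SIGPIPE (the status is typically 141, i.e. 128 + 13, though that depends on how signals are set up). `run_pipeline` is a made-up name.

```shell
#!/usr/bin/env bash
# head reads one line and exits; the writer is then terminated by the closed pipe.
run_pipeline() { yes | head -n 1 > /dev/null; }

run_pipeline
echo "default: $?"     # only head's status counts, so this reports success

( set -o pipefail; run_pipeline )
echo "pipefail: $?"    # now the writer's death makes the whole pipeline fail
```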

Yeah, I had a silly hack for that. I don't remember what it was. It's been 3-4 years since I wrote bash for a living. While not perfect, I still need to know if a pipeline command failed. Continuing a script after an invisible error, in many cases, could have been catastrophic.

I didn't know the locale part...

I learned about it the hard way lol. I used seq to generate a CSV file in a script. My Polish friend runs said script, and suddenly there's an extra column in the CSV...
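The usual defence is to pin the locale for the one command whose output format matters. A minimal sketch, assuming GNU seq; `csv_row` is a made-up name:

```shell
#!/usr/bin/env bash
# Force the C locale for this one command so the decimal separator is always ".",
# whatever LANG or LC_NUMERIC the caller happens to have set.
csv_row() {
    LC_ALL=C seq -s ',' 0 0.5 1
}

csv_row
```

Without the override, a locale that uses a decimal comma would put extra commas into the row, which is where the phantom column comes from.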

Nushell has pipefail by default (plus an actual error system that integrates with status codes) and has actual number values, so it doesn't have these problems.